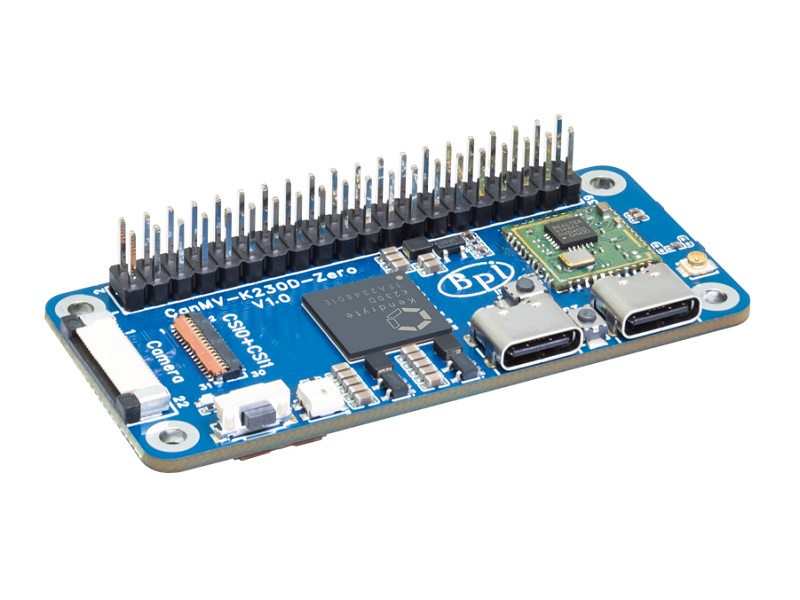

This article presents a project that uses the Banana Pi CanMV K230D Zero development board and a camera to detect and recognize human-body keypoints dynamically in real time.

https://bbs.elecfans.com/jishu_2493481_1_1.html

Project overview

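- The human keypoint detection application uses the YOLOv8n-pose model to estimate human pose;

- The detection result gives the positions of 17 skeletal keypoints, which are joined by lines of different colors and shown on screen.

The 17 skeletal keypoints are: nose, left eye, right eye, left ear, right ear, left shoulder, right shoulder, left elbow, right elbow, left wrist, right wrist, left hip, right hip, left knee, right knee, left ankle, right ankle.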
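The 17 keypoints follow the standard COCO order, and the demo code's `SKELETON` table refers to them by 1-based index (the drawing code subtracts 1 before indexing). As a minimal sketch, the plain-Python snippet below turns those index pairs into named limbs, which makes it easier to see what each colored line on screen represents. `KEYPOINT_NAMES` and `describe_skeleton` are hypothetical helpers written for illustration; the pairs are copied from the demo's `SKELETON` list.

```python
# COCO keypoint order, matching the list above (index 0 = nose).
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]

# Limb pairs copied from the demo's SKELETON list; indices are 1-based.
SKELETON = [(16, 14), (14, 12), (17, 15), (15, 13), (12, 13), (6, 12), (7, 13),
            (6, 7), (6, 8), (7, 9), (8, 10), (9, 11), (2, 3), (1, 2), (1, 3),
            (2, 4), (3, 5), (4, 6), (5, 7)]


def describe_skeleton(skeleton):
    """Convert 1-based index pairs into (name_a, name_b) limb tuples."""
    return [(KEYPOINT_NAMES[a - 1], KEYPOINT_NAMES[b - 1]) for a, b in skeleton]


limbs = describe_skeleton(SKELETON)
print(len(KEYPOINT_NAMES), len(limbs))  # 17 19
print(limbs[0])                         # ('left_ankle', 'left_knee')
```

The first five pairs form the legs and hips, the next seven the torso and arms, and the last seven the face and its links to the shoulders, which matches how the demo groups its limb colors.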

For the official model documentation, see: COCO8-Pose Dataset - Ultralytics YOLO Docs.

For the model training process, see: Train and Deploy a YOLOv8 Pose Estimation Model | Seeed Studio Wiki.

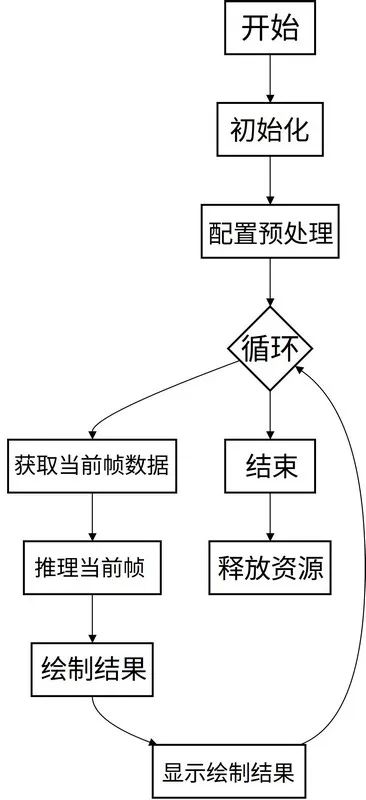

Flowchart

Code

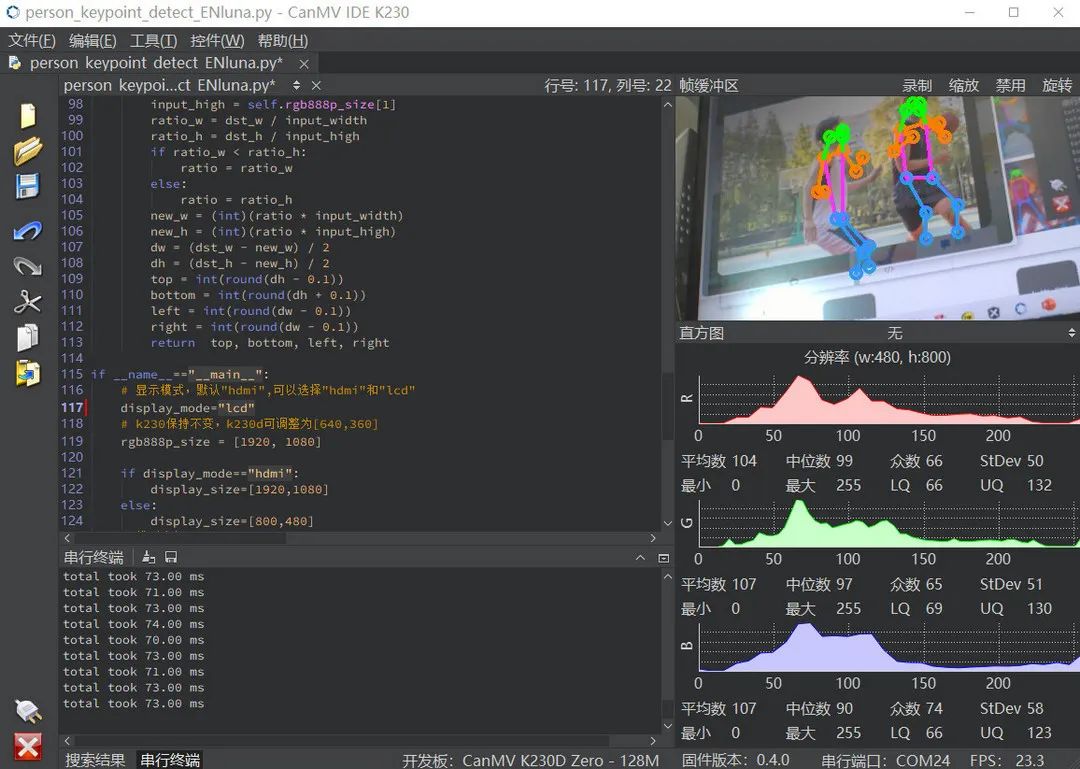

Open the file \CanMV\sdcard\examples\05-AI-Demo\person_keypoint_detect.py in CanMV IDE:

```python
from libs.PipeLine import PipeLine, ScopedTiming
from libs.AIBase import AIBase
from libs.AI2D import Ai2d
import os
import ujson
from media.media import *
from time import *
import nncase_runtime as nn
import ulab.numpy as np
import time
import utime
import image
import random
import gc
import sys
import aidemo

# Custom human keypoint detection class
class PersonKeyPointApp(AIBase):
    def __init__(self, kmodel_path, model_input_size, confidence_threshold=0.2, nms_threshold=0.5,
                 rgb888p_size=[1280, 720], display_size=[1920, 1080], debug_mode=0):
        super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)
        self.kmodel_path = kmodel_path
        # Model input resolution
        self.model_input_size = model_input_size
        # Confidence threshold
        self.confidence_threshold = confidence_threshold
        # NMS threshold
        self.nms_threshold = nms_threshold
        # Resolution of the image the sensor delivers to the AI path (width aligned up to a multiple of 16)
        self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]
        # Display resolution (width aligned up to a multiple of 16)
        self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]
        self.debug_mode = debug_mode
        # Skeleton: pairs of 1-based keypoint indices joined by a line (19 limbs)
        self.SKELETON = [(16, 14), (14, 12), (17, 15), (15, 13), (12, 13), (6, 12), (7, 13), (6, 7), (6, 8), (7, 9),
                         (8, 10), (9, 11), (2, 3), (1, 2), (1, 3), (2, 4), (3, 5), (4, 6), (5, 7)]
        # Limb colors, one per skeleton pair
        self.LIMB_COLORS = [(255, 51, 153, 255), (255, 51, 153, 255), (255, 51, 153, 255), (255, 51, 153, 255),
                            (255, 255, 51, 255), (255, 255, 51, 255), (255, 255, 51, 255),
                            (255, 255, 128, 0), (255, 255, 128, 0), (255, 255, 128, 0), (255, 255, 128, 0), (255, 255, 128, 0),
                            (255, 0, 255, 0), (255, 0, 255, 0), (255, 0, 255, 0), (255, 0, 255, 0),
                            (255, 0, 255, 0), (255, 0, 255, 0), (255, 0, 255, 0)]
        # Keypoint colors, 17 in total
        self.KPS_COLORS = [(255, 0, 255, 0), (255, 0, 255, 0), (255, 0, 255, 0), (255, 0, 255, 0), (255, 0, 255, 0),
                           (255, 255, 128, 0), (255, 255, 128, 0), (255, 255, 128, 0),
                           (255, 255, 128, 0), (255, 255, 128, 0), (255, 255, 128, 0),
                           (255, 51, 153, 255), (255, 51, 153, 255), (255, 51, 153, 255),
                           (255, 51, 153, 255), (255, 51, 153, 255), (255, 51, 153, 255)]
        # Ai2d instance used for model preprocessing
        self.ai2d = Ai2d(debug_mode)
        # Set the Ai2d input/output formats and data types
        self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

    # Configure preprocessing. pad and resize are used here; Ai2d supports crop/shift/pad/resize/affine,
    # see /sdcard/app/libs/AI2D.py for the implementation
    def config_preprocess(self, input_image_size=None):
        with ScopedTiming("set preprocess config", self.debug_mode > 0):
            # The Ai2d input size defaults to the sensor-to-AI size; pass input_image_size to override it
            ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size
            top, bottom, left, right = self.get_padding_param()
            self.ai2d.pad([0, 0, 0, 0, top, bottom, left, right], 0, [0, 0, 0])
            self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)
            self.ai2d.build([1, 3, ai2d_input_size[1], ai2d_input_size[0]],
                            [1, 3, self.model_input_size[1], self.model_input_size[0]])

    # Task-specific post-processing
    def postprocess(self, results):
        with ScopedTiming("postprocess", self.debug_mode > 0):
            # Uses the person_kp_postprocess interface of the aidemo library
            results = aidemo.person_kp_postprocess(results[0], [self.rgb888p_size[1], self.rgb888p_size[0]],
                                                   self.model_input_size, self.confidence_threshold, self.nms_threshold)
            return results

    # Draw the results: keypoints and skeleton
    def draw_result(self, pl, res):
        with ScopedTiming("display_draw", self.debug_mode > 0):
            if res[0]:
                pl.osd_img.clear()
                kpses = res[1]
                for i in range(len(res[0])):
                    # 19 limbs; the first 17 iterations also draw keypoint k
                    for k in range(17 + 2):
                        if k < 17:
                            kps_x, kps_y, kps_s = round(kpses[i][k][0]), round(kpses[i][k][1]), kpses[i][k][2]
                            # Scale from rgb888p frame coordinates to display coordinates
                            kps_x1 = int(float(kps_x) * self.display_size[0] // self.rgb888p_size[0])
                            kps_y1 = int(float(kps_y) * self.display_size[1] // self.rgb888p_size[1])
                            if kps_s > 0:
                                pl.osd_img.draw_circle(kps_x1, kps_y1, 5, self.KPS_COLORS[k], 4)
                        ske = self.SKELETON[k]
                        pos1_x, pos1_y = round(kpses[i][ske[0] - 1][0]), round(kpses[i][ske[0] - 1][1])
                        pos1_x_ = int(float(pos1_x) * self.display_size[0] // self.rgb888p_size[0])
                        pos1_y_ = int(float(pos1_y) * self.display_size[1] // self.rgb888p_size[1])
                        pos2_x, pos2_y = round(kpses[i][ske[1] - 1][0]), round(kpses[i][ske[1] - 1][1])
                        pos2_x_ = int(float(pos2_x) * self.display_size[0] // self.rgb888p_size[0])
                        pos2_y_ = int(float(pos2_y) * self.display_size[1] // self.rgb888p_size[1])
                        pos1_s, pos2_s = kpses[i][ske[0] - 1][2], kpses[i][ske[1] - 1][2]
                        # Only draw a limb when both of its endpoints are visible
                        if pos1_s > 0.0 and pos2_s > 0.0:
                            pl.osd_img.draw_line(pos1_x_, pos1_y_, pos2_x_, pos2_y_, self.LIMB_COLORS[k], 4)
                    # Free temporaries once per detected person
                    gc.collect()
            else:
                pl.osd_img.clear()

    # Compute letterbox padding so the frame keeps its aspect ratio inside the model input
    def get_padding_param(self):
        dst_w = self.model_input_size[0]
        dst_h = self.model_input_size[1]
        input_width = self.rgb888p_size[0]
        input_high = self.rgb888p_size[1]
        ratio = min(dst_w / input_width, dst_h / input_high)
        new_w = int(ratio * input_width)
        new_h = int(ratio * input_high)
        dw = (dst_w - new_w) / 2
        dh = (dst_h - new_h) / 2
        top = int(round(dh - 0.1))
        bottom = int(round(dh + 0.1))
        left = int(round(dw - 0.1))
        # + 0.1 (mirroring bottom) keeps left + right == dst_w - new_w when dw ends in .5
        right = int(round(dw + 0.1))
        return top, bottom, left, right

if __name__ == "__main__":
    # Display mode: "hdmi" (default) or "lcd"
    display_mode = "hdmi"
    # Frame size for the AI path: keep as-is on K230; on K230D it can be reduced to [640,360]
    rgb888p_size = [1920, 1080]
    if display_mode == "hdmi":
        display_size = [1920, 1080]
    else:
        display_size = [800, 480]
    # Model path
    kmodel_path = "/sdcard/examples/kmodel/yolov8n-pose.kmodel"
    # Other parameters
    confidence_threshold = 0.2
    nms_threshold = 0.5
    # Initialize the PipeLine
    pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)
    pl.create()
    # Initialize the custom human keypoint detection instance
    person_kp = PersonKeyPointApp(kmodel_path, model_input_size=[320, 320], confidence_threshold=confidence_threshold,
                                  nms_threshold=nms_threshold, rgb888p_size=rgb888p_size,
                                  display_size=display_size, debug_mode=0)
    person_kp.config_preprocess()
    try:
        while True:
            with ScopedTiming("total", 1):
                # Get the current frame
                img = pl.get_frame()
                # Run inference on the current frame
                res = person_kp.run(img)
                # Draw the results onto the PipeLine's OSD image
                person_kp.draw_result(pl, res)
                # Show the current drawing
                pl.show_image()
                gc.collect()
    finally:
        # Release the model and the pipeline when the loop is interrupted (e.g. by a KeyboardInterrupt)
        person_kp.deinit()
        pl.destroy()
```
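The demo works with three coordinate spaces: the rgb888p frame from the sensor, the letterboxed model input (320x320 here), and the display. The desktop-Python sketch below reproduces the arithmetic of `get_padding_param()` and of the scaling in `draw_result()`, which makes it easy to check padding values for other frame sizes (such as the [640,360] suggested for K230D) before running on the board. The helper names `letterbox_params`, `model_to_frame` and `frame_to_display` are hypothetical. `model_to_frame` is only an assumption about what `aidemo.person_kp_postprocess` does internally (the demo passes it the frame size and model input size, but its implementation is not shown).

```python
# Sketch of the coordinate mappings used by the keypoint demo, runnable in desktop Python.
# Helper names are hypothetical; they mirror get_padding_param() and draw_result().


def letterbox_params(src_w, src_h, dst_w, dst_h):
    """Return (ratio, top, bottom, left, right) for fitting src into dst without distortion."""
    ratio = min(dst_w / src_w, dst_h / src_h)
    new_w = int(ratio * src_w)
    new_h = int(ratio * src_h)
    dw = (dst_w - new_w) / 2
    dh = (dst_h - new_h) / 2
    # Nudging by -0.1 / +0.1 puts any odd leftover pixel on the bottom/right edge,
    # so top + bottom == dst_h - new_h and left + right == dst_w - new_w.
    top = int(round(dh - 0.1))
    bottom = int(round(dh + 0.1))
    left = int(round(dw - 0.1))
    right = int(round(dw + 0.1))
    return ratio, top, bottom, left, right


def model_to_frame(x, y, ratio, top, left):
    """Undo the letterbox: map a point in model-input pixels back to frame pixels.
    ASSUMPTION: this is the kind of inverse the aidemo post-processing applies."""
    return (x - left) / ratio, (y - top) / ratio


def frame_to_display(x, y, frame_size, display_size):
    """Scale a frame-space point to display pixels, using the same formula as draw_result()."""
    dx = int(float(x) * display_size[0] // frame_size[0])
    dy = int(float(y) * display_size[1] // frame_size[1])
    return dx, dy


if __name__ == "__main__":
    # 640x360 frame into a 320x320 model input
    ratio, top, bottom, left, right = letterbox_params(640, 360, 320, 320)
    print(ratio, top, bottom, left, right)  # 0.5 70 70 0 0
    # The centre of the model input maps back to the centre of the frame...
    fx, fy = model_to_frame(160, 160, ratio, top, left)
    print(fx, fy)  # 320.0 180.0
    # ...and then to the centre of an 800x480 LCD
    print(frame_to_display(fx, fy, (640, 360), (800, 480)))  # (400, 240)
```

With a 640x360 frame, the model sees a 320x180 image with 70 rows of padding above and below it. When the leftover is odd (for example a 720x1280 portrait frame into a 321x320 input), the symmetric -0.1/+0.1 rounding puts the extra pixel on the right, so the padded image still matches the model input size exactly.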

Connect the development board and run the code.

Point the camera at the scene to be detected; the real-time recognition results appear in the IDE.

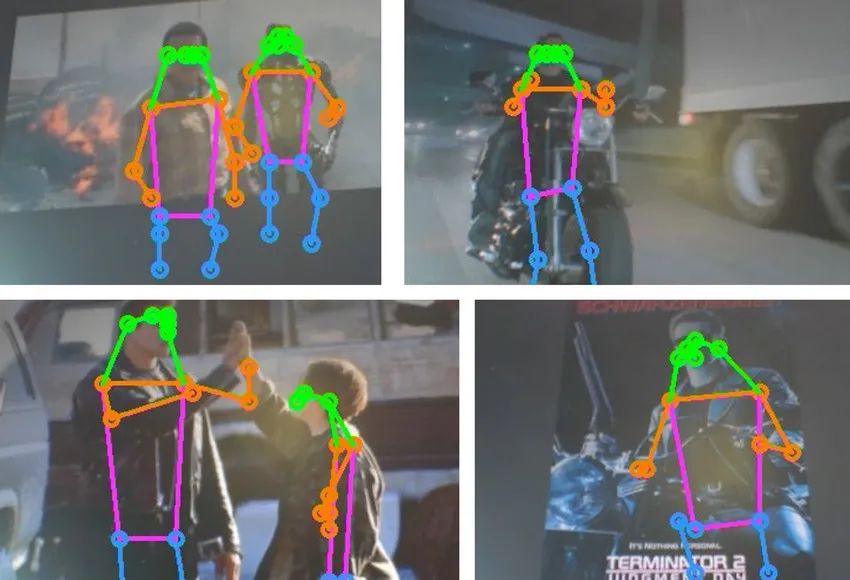

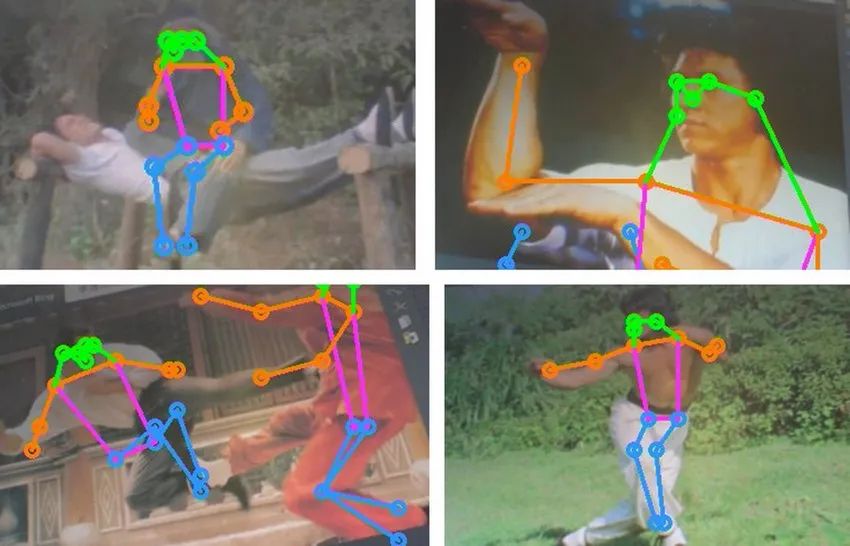

Results

Sports scene

Science-fiction film

Action film

See the videos at the top and bottom of the article for the dynamic recognition results.

Summary

This article presented a project that uses the Banana Pi CanMV K230D Zero development board and a camera to detect and recognize human-body keypoints dynamically in real time, providing a reference for the rapid development and design of related products.


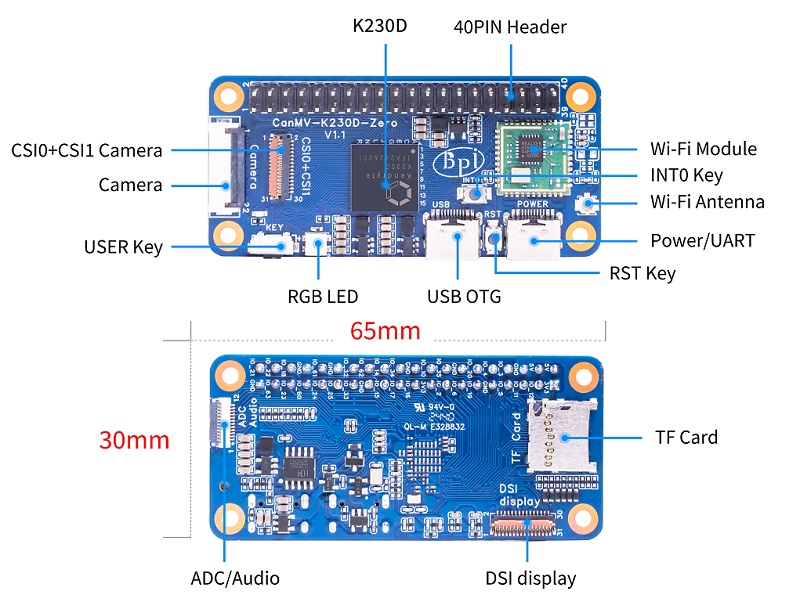

香蕉派 BPI-CanMV-K230D-Zero 采用嘉楠科技 K230D RISC-V芯片設(shè)計(jì)

香蕉派開(kāi)發(fā)板BPI-CanMV-K230D-Zero 嘉楠科技 RISC-V開(kāi)發(fā)板公開(kāi)發(fā)售

Banana Pi BPI-CanMV-K230D-Zero :AIoT 應(yīng)用的 Kendryte K230D RISC-V

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】香蕉派 K230D 視覺(jué)開(kāi)發(fā)板開(kāi)箱+CamMV 環(huán)境搭建

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】介紹、系統(tǒng)安裝、工程測(cè)試

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】+初品嘉楠科技產(chǎn)品

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】01_開(kāi)箱+環(huán)境配置+系統(tǒng)燒錄

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】+燈效控制與Python編程

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】人體關(guān)鍵點(diǎn)檢測(cè)

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】+ADC數(shù)據(jù)采集及尋找引腳的問(wèn)題

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】+串口通訊及應(yīng)用

【BPI-CanMV-K230D-Zero開(kāi)發(fā)板體驗(yàn)】+TFT屏顯示驅(qū)動(dòng)及信息顯示

Banana Pi BPI-CanMV-K230D-Zero 采用嘉楠科技 K230D RISC-V芯片設(shè)計(jì)

香蕉派開(kāi)發(fā)板BPI-CanMV-K230D-Zero 嘉楠科技 RISC-V開(kāi)發(fā)板公開(kāi)發(fā)售

搭載雙核玄鐵C908 ?RISC-V CPU,BPI-CanMV-K230D-Zero開(kāi)發(fā)板試用

【開(kāi)發(fā)實(shí)例】基于BPI-CanMV-K230D-Zero開(kāi)發(fā)板實(shí)現(xiàn)人體關(guān)鍵點(diǎn)的實(shí)時(shí)動(dòng)態(tài)識(shí)別

【開(kāi)發(fā)實(shí)例】基于BPI-CanMV-K230D-Zero開(kāi)發(fā)板實(shí)現(xiàn)人體關(guān)鍵點(diǎn)的實(shí)時(shí)動(dòng)態(tài)識(shí)別

評(píng)論