This article comes from HPMicro developer @Xusiwei1236 and shows how to run an edge-AI framework on the HPM6750. If you're interested, read on!

--------------- Review content below ---------------

What is TFLM?

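You have probably heard of TensorFlow, the machine learning library developed and open-sourced by Google. It supports both model training and model inference.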

Today's topic, TFLM, stands for TensorFlow Lite for Microcontrollers: a version of TensorFlow Lite adapted for microcontrollers. So what is TensorFlow Lite?

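TensorFlow Lite (usually abbreviated TFLite) is a solution the TensorFlow team developed for deploying models to mobile devices. Put simply, it is TensorFlow for phones. Here is how the TensorFlow website describes TFLite: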

"TensorFlow Lite is a set of tools that helps developers run models on mobile, embedded, and IoT devices, enabling on-device machine learning."

TensorFlow Lite for Microcontrollers (TFLM), the subject of this article, is the microcontroller version of TensorFlow Lite. Here is the official description:

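"TensorFlow Lite for Microcontrollers (hereafter TFLM) is an experimental port of TensorFlow Lite designed to run on microcontrollers and other devices with only a few kilobytes of memory. It runs directly on 'bare metal' and requires no operating system support, no standard C/C++ libraries, and no dynamic memory allocation. The core runtime fits in just 16 KB on a Cortex M3, and with enough operators to run a speech keyword detection model, it takes up only 22 KB."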
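The "no dynamic memory allocation" point is easier to picture with a concrete example. In TFLM the application hands the interpreter one fixed-size buffer, usually called the tensor arena, and tensors are placed inside it. The sketch below illustrates only that underlying idea with a minimal bump allocator over a static buffer; `StaticArena` and `g_arena_buffer` are hypothetical names, not TFLM APIs, and TFLM's real allocator is considerably more elaborate.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical illustration of the "tensor arena" idea, NOT TFLM's allocator:
// every allocation is carved out of one statically reserved buffer, so no
// malloc/new is ever called and memory use is fixed at link time.
class StaticArena {
 public:
  StaticArena(std::uint8_t* buffer, std::size_t size)
      : base_(buffer), size_(size), used_(0) {}

  // Returns a block of `bytes` bytes aligned to `alignment` (a power of two),
  // or nullptr if the arena does not have enough space left.
  void* Allocate(std::size_t bytes, std::size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    const std::uintptr_t start = reinterpret_cast<std::uintptr_t>(base_) + used_;
    const std::uintptr_t aligned =
        (start + alignment - 1) & ~static_cast<std::uintptr_t>(alignment - 1);
    const std::size_t padding = static_cast<std::size_t>(aligned - start);
    if (padding > size_ - used_ || bytes > size_ - used_ - padding) {
      return nullptr;  // Out of arena space: there is no heap to fall back on.
    }
    used_ += padding + bytes;
    return reinterpret_cast<void*>(aligned);
  }

  std::size_t Used() const { return used_; }
  void Reset() { used_ = 0; }  // Releases everything at once.

 private:
  std::uint8_t* base_;
  std::size_t size_;
  std::size_t used_;
};

// Example arena; 1024 bytes is an arbitrary illustration size.
alignas(16) static std::uint8_t g_arena_buffer[1024];
```

Allocation is just a pointer bump plus alignment padding, so it is deterministic and cannot fragment. The trade-off is that memory is only reclaimed all at once, and the buffer has to be sized up front for the model being run.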

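All three come from Google and share one lineage. The difference is that TensorFlow supports both training and inference, while the other two support inference only. TFLite mainly targets mobile devices such as phones and tablets, while TFLM can run on microcontrollers. Historically, the latter two are "spin-off projects" of TensorFlow. The three grew like a tree: TFLite branched off from TensorFlow, and TFLite-Micro branched off from TFLite, and today the three are developed in parallel. For a long time all three were maintained in a single repository, and in terms of directory nesting, TensorFlow contained the other two and TFLite contained tflite-micro.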

TFLM in the HPM SDK

- TFLM middleware

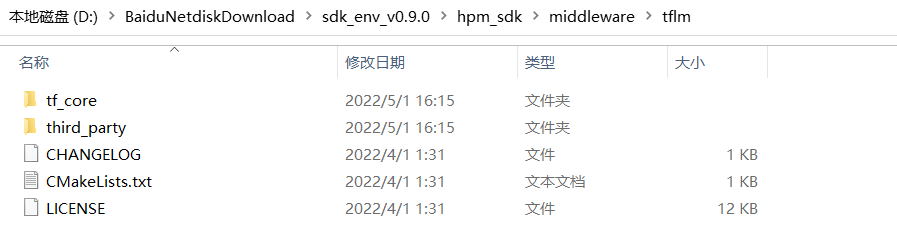

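The HPM SDK integrates TFLM as middleware (similar to a library, but not compiled into a separate library file). It lives in the hpm_sdk\middleware subdirectory: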

The code in this subdirectory is a trimmed-down copy of the open-source TFLM project, with many unneeded files removed.


TFLM sample

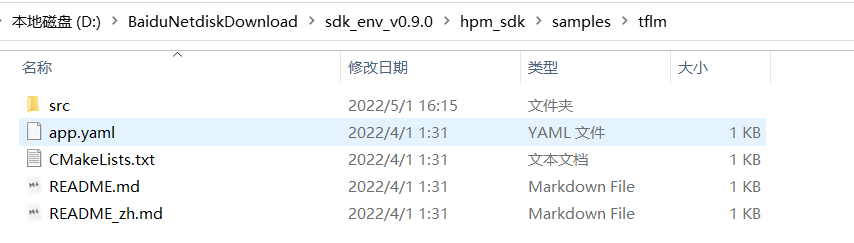

The HPM SDK also provides a TFLM sample, located in the hpm_sdk\samples\tflm subdirectory:

The sample is adapted from the official person_detection example, with camera image capture and LCD display of the results added.

Since I don't have the matching camera and display, I did not use this sample for the experiments in this article.

Running the TFLM benchmark on the HPM6750

Next, using the person detection benchmark as an example, I'll walk through running the TFLM benchmark on the HPM6750.

Adding the person detection benchmark source code to the HPM SDK environment

Follow these steps to add the person detection benchmark source files to the HPM SDK environment:

In the samples subdirectory of the HPM SDK, create a tflm_person_detect_benchmark directory, and create a src directory inside it;

From the tflite-micro directory described earlier, in which the person detection benchmark has already been run, copy the following files into the src directory:

tensorflow\lite\micro\benchmarks\person_detection_benchmark.cc

tensorflow\lite\micro\benchmarks\micro_benchmark.h

tensorflow\lite\micro\examples\person_detection\model_settings.h

tensorflow\lite\micro\examples\person_detection\model_settings.cc

Create a testdata subdirectory under src, and copy all files from the following directory of tflite-micro into testdata:

tensorflow\lite\micro\tools\make\gen\linux_x86_64_default\genfiles\tensorflow\lite\micro\examples\person_detection\testdata

Update the paths in some of the #include directives in person_detection_benchmark.cc, model_settings.cc, no_person_image_data.cc, and person_image_data.cc so that they match the files' new relative locations;

In person_detection_benchmark.cc, add a board_init(); call at the beginning of the main function, and add #include "board.h" at the top of the file.
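Fixing the #include paths by hand across four files is easy to get wrong. Below is a minimal sketch of the rewrite rule as a host-side C++ helper, assuming the copied files use upstream tflite-micro include paths starting with the prefixes listed in the code (check them against your own checkout). `RewriteInclude` is a hypothetical helper, not part of the HPM SDK or TFLM. Because the CMakeLists.txt in the next step adds src to the include path with sdk_app_inc(src), paths are rewritten relative to src.

```cpp
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper (not part of HPM SDK or TFLM). Rewrites one source line:
// if it is a quoted #include whose path starts with a known upstream prefix,
// the prefix is replaced so the path resolves relative to src/.
std::string RewriteInclude(const std::string& line) {
  // Assumed upstream prefixes; the more specific testdata prefix comes first.
  static const std::vector<std::pair<std::string, std::string>> kPrefixMap = {
      {"tensorflow/lite/micro/examples/person_detection/testdata/", "testdata/"},
      {"tensorflow/lite/micro/examples/person_detection/", ""},
      {"tensorflow/lite/micro/benchmarks/", ""},
  };
  const std::string kDirective = "#include \"";
  if (line.compare(0, kDirective.size(), kDirective) != 0) {
    return line;  // Not a quoted #include: leave untouched.
  }
  const std::string path = line.substr(kDirective.size());
  for (const auto& entry : kPrefixMap) {
    if (path.compare(0, entry.first.size(), entry.first) == 0) {
      return kDirective + entry.second + path.substr(entry.first.size());
    }
  }
  return line;  // Unknown prefix: keep the line as-is.
}
```

Applied line by line, `#include "tensorflow/lite/micro/benchmarks/micro_benchmark.h"` becomes `#include "micro_benchmark.h"`, while lines with any other prefix are returned unchanged.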

Adding the CMakeLists.txt and app.yaml files

Create a CMakeLists.txt file next to src (at the same directory level), with the following content:

    cmake_minimum_required(VERSION 3.13)

    set(CONFIG_TFLM 1)

    find_package(hpm-sdk REQUIRED HINTS $ENV{HPM_SDK_BASE})
    project(tflm_person_detect_benchmark)
    set(CMAKE_CXX_STANDARD 11)

    sdk_app_src(src/model_settings.cc)
    sdk_app_src(src/person_detection_benchmark.cc)
    sdk_app_src(src/testdata/no_person_image_data.cc)
    sdk_app_src(src/testdata/person_image_data.cc)

    sdk_app_inc(src)
    sdk_ld_options("-lm")
    sdk_ld_options("--std=c++11")
    sdk_compile_definitions(__HPMICRO__)
    sdk_compile_definitions(-DINIT_EXT_RAM_FOR_DATA=1)
    # sdk_compile_options("-mabi=ilp32f")
    # sdk_compile_options("-march=rv32imafc")
    sdk_compile_options("-O2")
    # sdk_compile_options("-O3")
    set(SEGGER_LEVEL_O3 1)
    generate_ses_project()

Create an app.yaml file next to src, with the following content:

    dependency:
      - tflm

- Building and running the TFLM benchmark

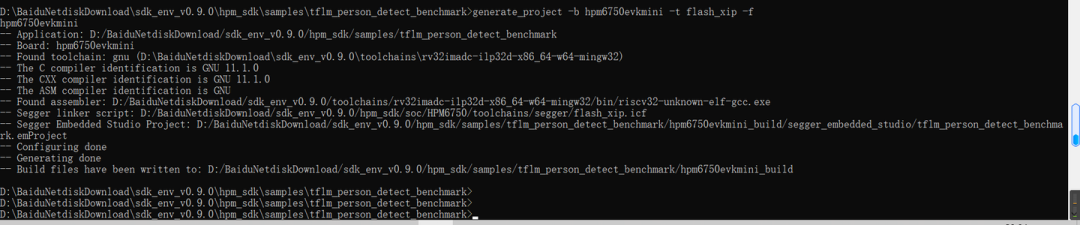

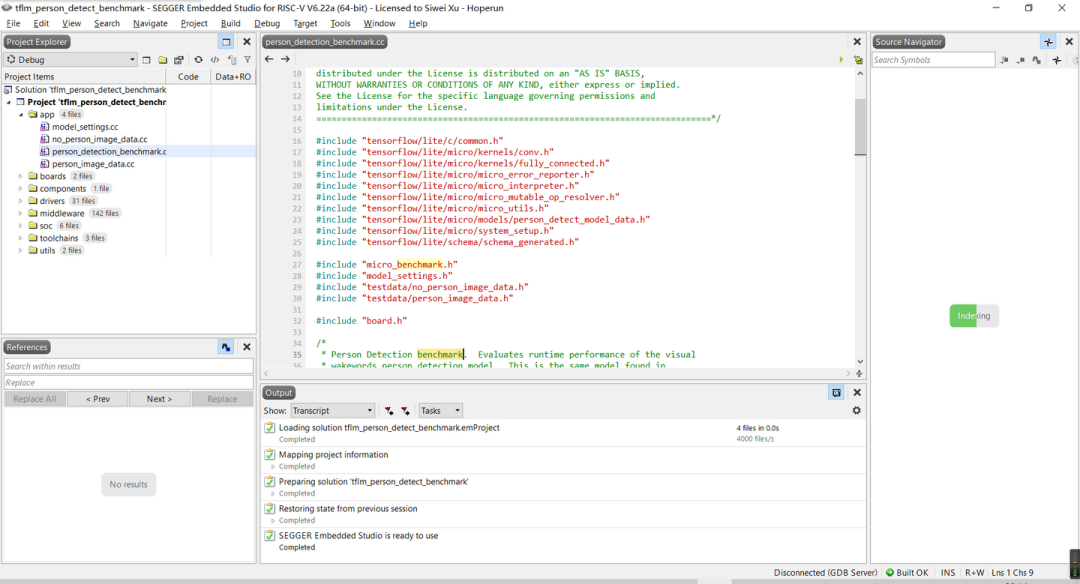

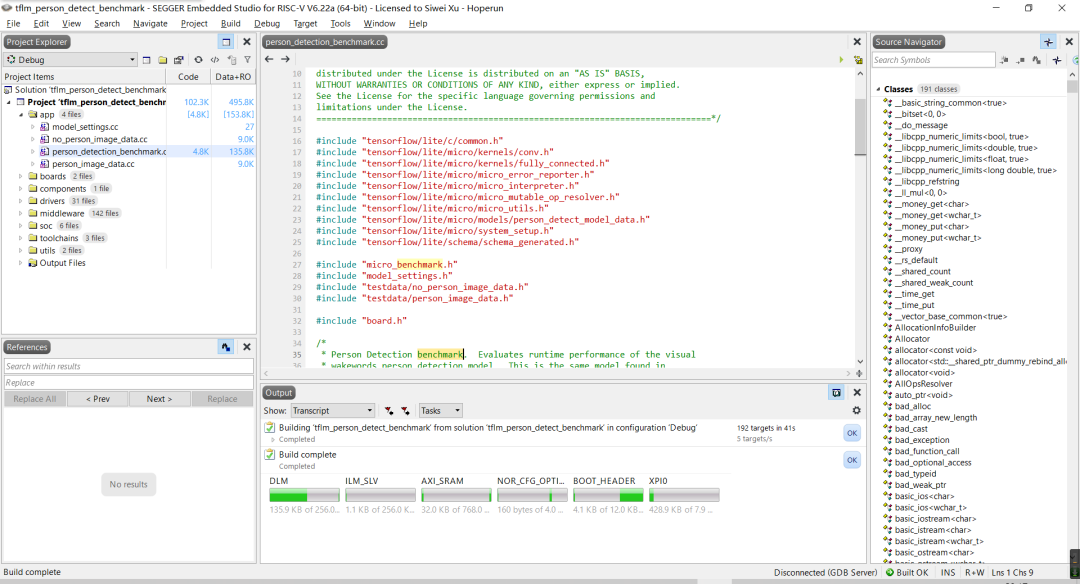

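Next comes the familiar part: building and running. First, use generate_project to generate the project:

Then connect the HPM6750 board to the PC and open the newly generated project in Embedded Studio:

Because this project pulls in the TFLM source code and therefore contains many files, the Indexing step in the source navigation pane on the right takes a long time to finish.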

You can then press F7 to build the project and F5 to debug it:

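After the build completes, first open a serial terminal and connect it to the board's serial port at 115200 baud. Start the debug session and simply let the program continue; the benchmark output then appears in the serial terminal: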

    ==============================
     hpm6750evkmini clock summary
    ==============================
    cpu0: 816000000Hz
    cpu1: 816000000Hz
    axi0: 200000000Hz
    axi1: 200000000Hz
    axi2: 200000000Hz
    ahb: 200000000Hz
    mchtmr0: 24000000Hz
    mchtmr1: 1000000Hz
    xpi0: 133333333Hz
    xpi1: 400000000Hz
    dram: 166666666Hz
    display: 74250000Hz
    cam0: 59400000Hz
    cam1: 59400000Hz
    jpeg: 200000000Hz
    pdma: 200000000Hz
    ==============================

    InitializeBenchmarkRunner took 114969 ticks (4 ms).

    WithPersonDataIterations(1) took 10694521 ticks (445 ms)
    DEPTHWISE_CONV_2D took 275798 ticks (11 ms).
    DEPTHWISE_CONV_2D took 280579 ticks (11 ms).
    CONV_2D took 516051 ticks (21 ms).
    DEPTHWISE_CONV_2D took 139000 ticks (5 ms).
    CONV_2D took 459646 ticks (19 ms).
    DEPTHWISE_CONV_2D took 274903 ticks (11 ms).
    CONV_2D took 868518 ticks (36 ms).
    DEPTHWISE_CONV_2D took 68180 ticks (2 ms).
    CONV_2D took 434392 ticks (18 ms).
    DEPTHWISE_CONV_2D took 132918 ticks (5 ms).
    CONV_2D took 843014 ticks (35 ms).
    DEPTHWISE_CONV_2D took 33228 ticks (1 ms).
    CONV_2D took 423288 ticks (17 ms).
    DEPTHWISE_CONV_2D took 62040 ticks (2 ms).
    CONV_2D took 833033 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62198 ticks (2 ms).
    CONV_2D took 834644 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62176 ticks (2 ms).
    CONV_2D took 838212 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62206 ticks (2 ms).
    CONV_2D took 832857 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62194 ticks (2 ms).
    CONV_2D took 832882 ticks (34 ms).
    DEPTHWISE_CONV_2D took 16050 ticks (0 ms).
    CONV_2D took 438774 ticks (18 ms).
    DEPTHWISE_CONV_2D took 27494 ticks (1 ms).
    CONV_2D took 974362 ticks (40 ms).
    AVERAGE_POOL_2D took 2323 ticks (0 ms).
    CONV_2D took 1128 ticks (0 ms).
    RESHAPE took 184 ticks (0 ms).
    SOFTMAX took 2249 ticks (0 ms).

    NoPersonDataIterations(1) took 10694160 ticks (445 ms)
    DEPTHWISE_CONV_2D took 274922 ticks (11 ms).
    DEPTHWISE_CONV_2D took 281095 ticks (11 ms).
    CONV_2D took 515380 ticks (21 ms).
    DEPTHWISE_CONV_2D took 139428 ticks (5 ms).
    CONV_2D took 460039 ticks (19 ms).
    DEPTHWISE_CONV_2D took 275255 ticks (11 ms).
    CONV_2D took 868787 ticks (36 ms).
    DEPTHWISE_CONV_2D took 68384 ticks (2 ms).
    CONV_2D took 434537 ticks (18 ms).
    DEPTHWISE_CONV_2D took 133071 ticks (5 ms).
    CONV_2D took 843202 ticks (35 ms).
    DEPTHWISE_CONV_2D took 33291 ticks (1 ms).
    CONV_2D took 423388 ticks (17 ms).
    DEPTHWISE_CONV_2D took 62190 ticks (2 ms).
    CONV_2D took 832978 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62205 ticks (2 ms).
    CONV_2D took 834636 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62213 ticks (2 ms).
    CONV_2D took 838212 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62239 ticks (2 ms).
    CONV_2D took 832850 ticks (34 ms).
    DEPTHWISE_CONV_2D took 62217 ticks (2 ms).
    CONV_2D took 832856 ticks (34 ms).
    DEPTHWISE_CONV_2D took 16040 ticks (0 ms).
    CONV_2D took 438779 ticks (18 ms).
    DEPTHWISE_CONV_2D took 27481 ticks (1 ms).
    CONV_2D took 974354 ticks (40 ms).
    AVERAGE_POOL_2D took 1812 ticks (0 ms).
    CONV_2D took 1077 ticks (0 ms).
    RESHAPE took 341 ticks (0 ms).
    SOFTMAX took 901 ticks (0 ms).

    WithPersonDataIterations(10) took 106960312 ticks (4456 ms)

    NoPersonDataIterations(10) took 106964554 ticks (4456 ms)

As the output shows, on the HPM6750EVKMINI board, running the person detection model 10 times in a row takes 4456 ms in total, an average of 445.6 ms per run.

Running the TFLM benchmark on the Raspberry Pi 3B+


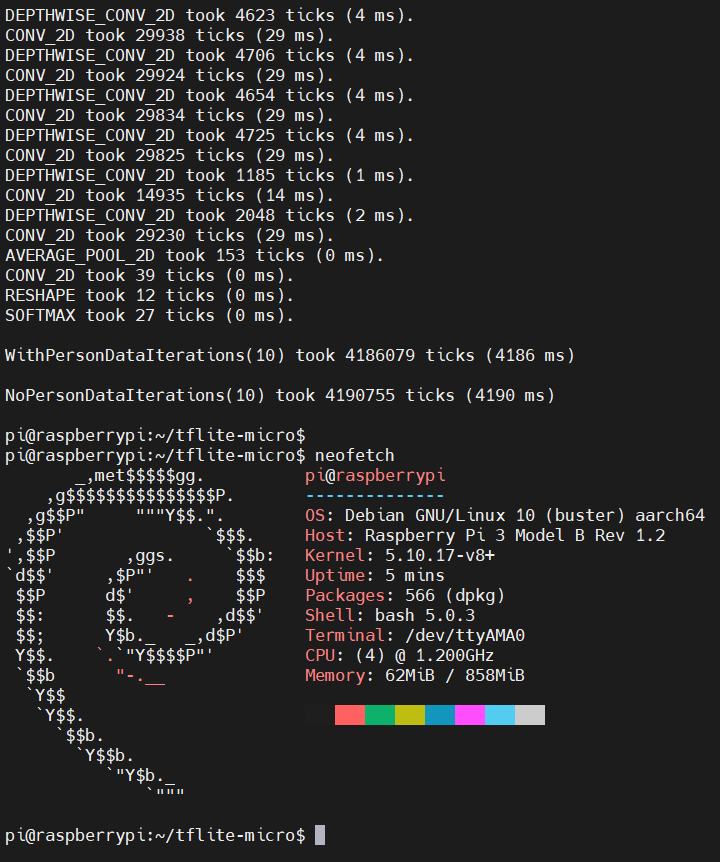

On the Raspberry Pi 3B+, just as on the PC, you can directly run the same test command used on the PC to get the benchmark results:

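As the output shows, on the Raspberry Pi 3B+, for the image with a person, 10 consecutive runs of the person detection model take 4186 ms in total, an average of 418.6 ms per run; for the image without a person, 10 consecutive runs take 4190 ms, an average of 419 ms per run.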

Comparing benchmark results on the HPM6750, Raspberry Pi 3B+ and AMD R7 4800H

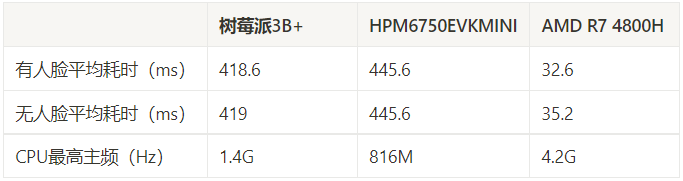

The average run times of the person detection model on the HPM6750EVKMINI board, the Raspberry Pi 3B+ and the AMD R7 4800H are summarized below:

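As the summary shows, for the TFLM person detection workload the HPM6750EVKMINI and the Raspberry Pi 3B+ perform about the same (445.6 ms vs. 418.6 ms per run, a gap of about 6%). Although the HPM6750's 816 MHz CPU clock is lower than the 1.4 GHz of the Cortex-A53 cores in the Raspberry Pi 3B+'s BCM2837B0, its single-core compute performance is not far behind.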
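The clock-frequency argument can be made a little more concrete with back-of-the-envelope arithmetic: average latency multiplied by nominal clock frequency estimates the CPU cycles spent per inference. This is a rough sketch under loud assumptions: both CPUs are taken to hold their nominal clocks for the whole run (the Raspberry Pi may throttle), and memory, cache and OS effects are ignored. `BenchResult`, `MegaCyclesPerInference` and `CycleRatio` are hypothetical names.

```cpp
// Back-of-the-envelope estimate, not a measurement: CPU cycles per inference
// = average latency x nominal clock. Assumes the core stays at its nominal
// frequency for the whole run and ignores memory, cache and OS effects.
struct BenchResult {
  const char* platform;
  double avg_ms;     // average time per inference from the benchmark log
  double clock_mhz;  // nominal CPU clock frequency
};

// ms * MHz = 1e-3 s * 1e6 cycles/s = 1e3 cycles, so divide by 1000 for millions.
double MegaCyclesPerInference(const BenchResult& r) {
  return r.avg_ms * r.clock_mhz / 1000.0;
}

// A ratio below 1 means `a` needs fewer cycles per inference than `b`.
double CycleRatio(const BenchResult& a, const BenchResult& b) {
  return MegaCyclesPerInference(a) / MegaCyclesPerInference(b);
}
```

With the figures reported in this article, the HPM6750 comes out at about 363.6 million cycles per inference (445.6 ms × 816 MHz) and the Raspberry Pi 3B+ at about 586.0 million (418.6 ms × 1400 MHz), a ratio of roughly 0.62. Under these assumptions, the HPM6750 needs about 38% fewer cycles per inference.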

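Note that the TFLM benchmark on the Raspberry Pi 3B+ runs on a 64-bit Debian Linux distribution, whereas the test program on the HPM6750 runs directly on bare metal. The operating system kernel's task scheduler takes away some of the CPU's compute capacity. So this is not a rigorous head-to-head comparison, and the results are for reference only.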

(Reference: http://m.eeworld.com.cn/bbs_thread-1208270-1-1.html)
