Understanding Common Decoding Strategies for Text Generation


Note: this post covers only commonly used decoding strategies; it does not include more recent research results.


This post covers:

- Background
- Problem
- Decoding Strategies
  - Standard Greedy Search
  - Beam Search
  - Sampling
  - Top-k Sampling
  - Sampling with Temperature
  - Top-p (Nucleus) Sampling
- Code Tips
- Summary

1. Background

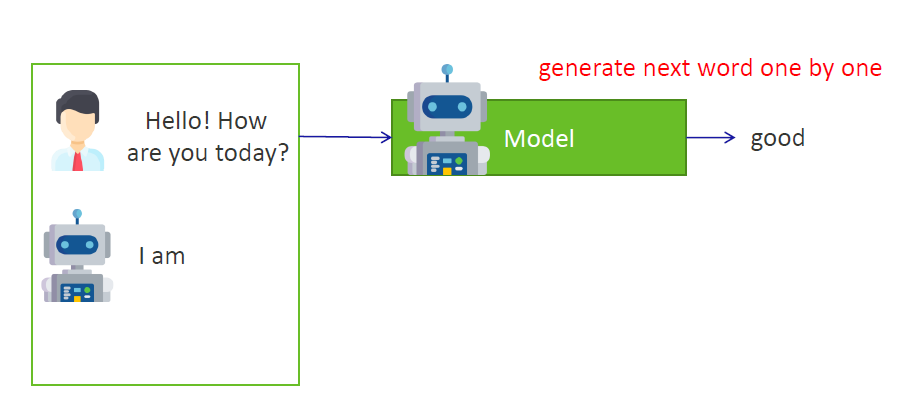

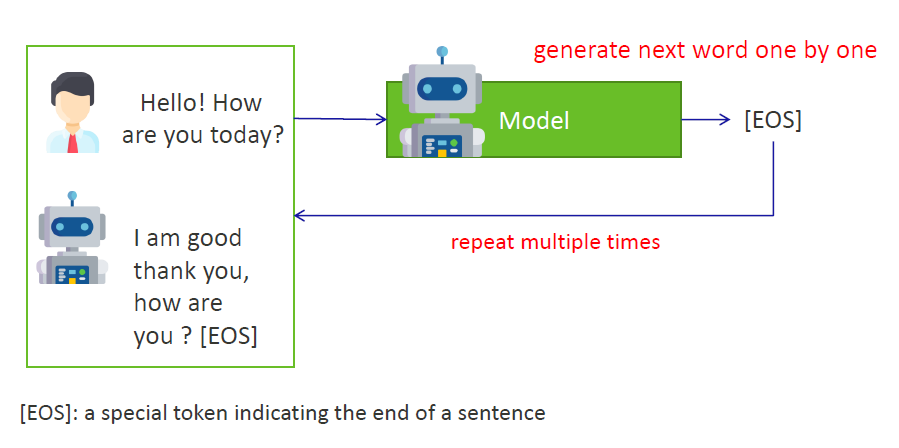

"Autoregressive" means that when the model generates text, it does not produce a whole passage in one go (emitting several words at once); instead it generates one word at a time, each conditioned on everything produced before it.

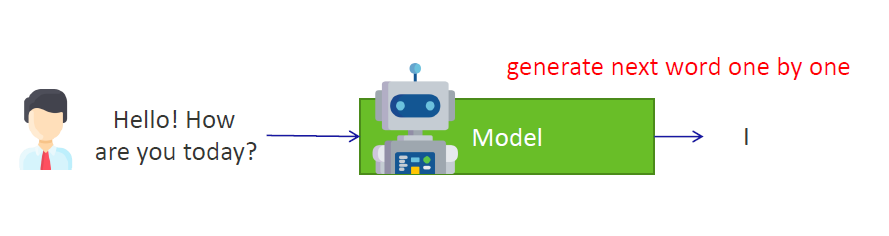

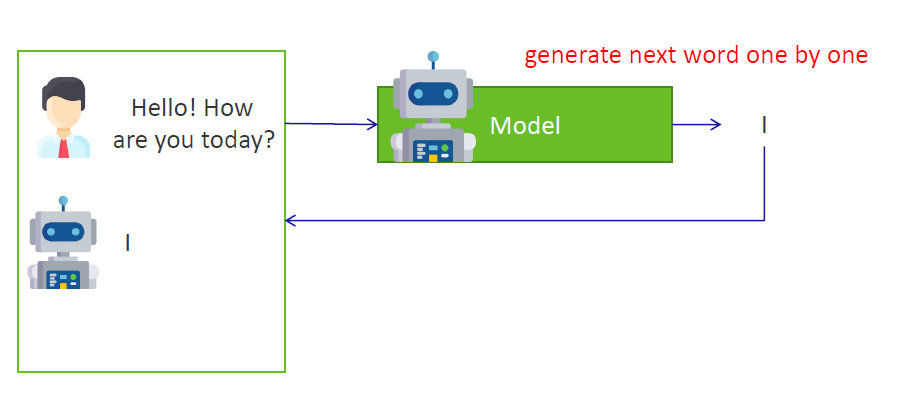

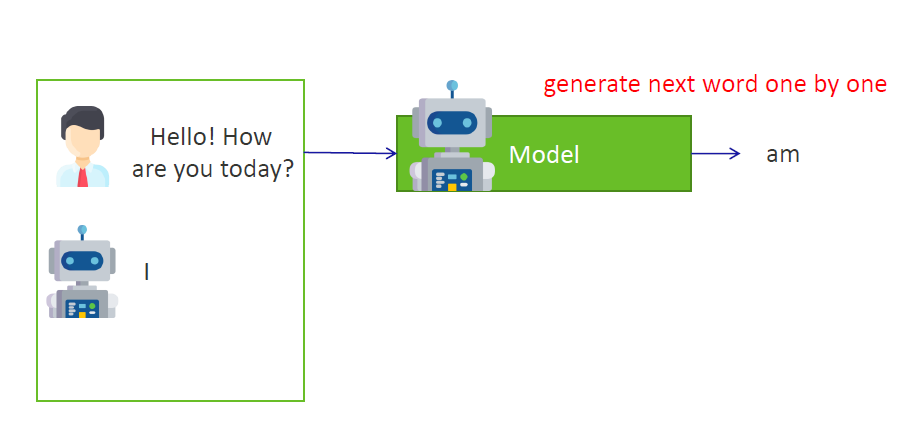

For example, in the figure:

1) A user asks the model a question: "Hello! How are you today?"

2) The model has to generate a reply. The first word it generates is "I".

3) Once "I" has been generated, the model uses everything it has so far (the question + "I") to generate the next word: "am".

4) Once "I am" has been generated, the model uses the question + "I am" to generate the next word: "good".

5) These steps repeat until the model generates a special word signalling that generation can stop. In the example, generation is complete when "[EOS]" (End of Sentence) is produced.
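The loop in steps 1 to 5 can be sketched as follows. This is a minimal illustration only: `toy_model` is a hypothetical stand-in that looks up a fixed next word, whereas a real language model computes a probability table and a decoding strategy (the subject of this post) picks a word from it.

```python
# Hypothetical stand-in for a language model: maps the words generated so far
# to the next word. A real model would return a probability for every word.
TOY_NEXT_WORD = {
    (): "I",
    ("I",): "am",
    ("I", "am"): "good",
    ("I", "am", "good"): "[EOS]",
}


def toy_model(prompt, generated):
    """Return the next word given the prompt and the words generated so far.
    (This toy ignores the prompt; a real model conditions on it too.)"""
    return TOY_NEXT_WORD[tuple(generated)]


def generate(prompt, max_steps=10):
    generated = []
    for _ in range(max_steps):
        next_word = toy_model(prompt, generated)  # one word per step
        if next_word == "[EOS]":  # special end-of-sentence word: stop
            break
        generated.append(next_word)  # fed back in on the next step
    return generated


print(generate("Hello! How are you today?"))  # ['I', 'am', 'good']
```

The `max_steps` cap is a common safeguard so that generation still stops if the end word is never produced.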

2. Problem

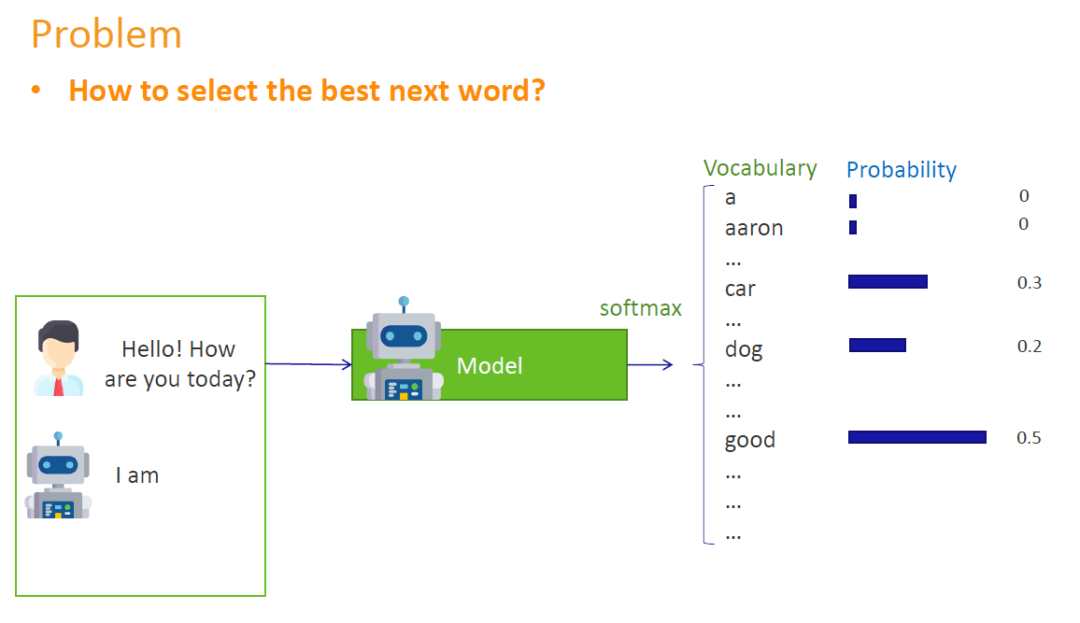

Because this kind of model produces text one word at a time, whether it produces good text depends on how smartly we decide which word to output at each step.

Note that in this post, "good" does not mean that the model is well trained and can express itself at a near-human level. Here, "good" describes the strategy for selecting output words: whenever the model predicts the next word, in any state (that is, whether or not the model is well trained), the strategy always has its own way of doing its best to pick the word it considers most reasonable as the output.

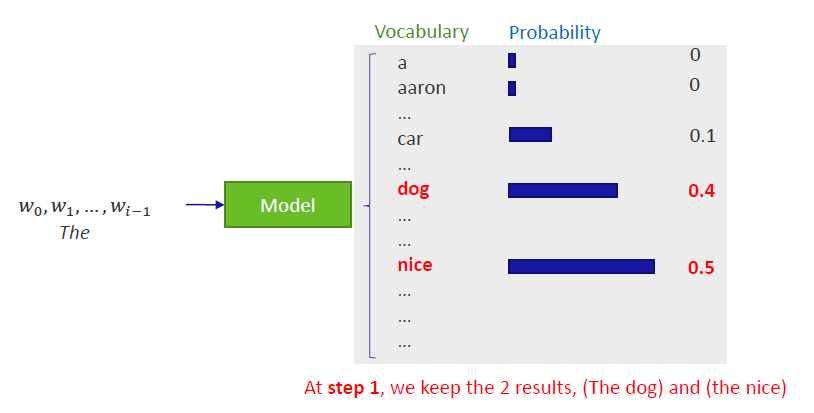

When the word-selection strategy is about to choose the next output word, it works from a huge table: the probabilities the model currently assigns to each candidate for the next word.
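As a rough sketch of where this table comes from: a model typically outputs a raw score (logit) for every vocabulary word, and a softmax turns those scores into probabilities that sum to 1. The four-word vocabulary and its scores below are made up for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores (logits) into a probability table that sums to 1."""
    m = max(logits.values())  # subtract the max score for numerical stability
    exps = {word: math.exp(score - m) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Hypothetical scores for a tiny vocabulary.
logits = {"good": 2.0, "fine": 1.0, "tired": 0.5, "banana": -1.0}
table = softmax(logits)
for word, p in sorted(table.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word:>7}: {p:.3f}")
```

Every strategy below differs only in how it picks a word from a table like `table`.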

3. Decoding Strategies

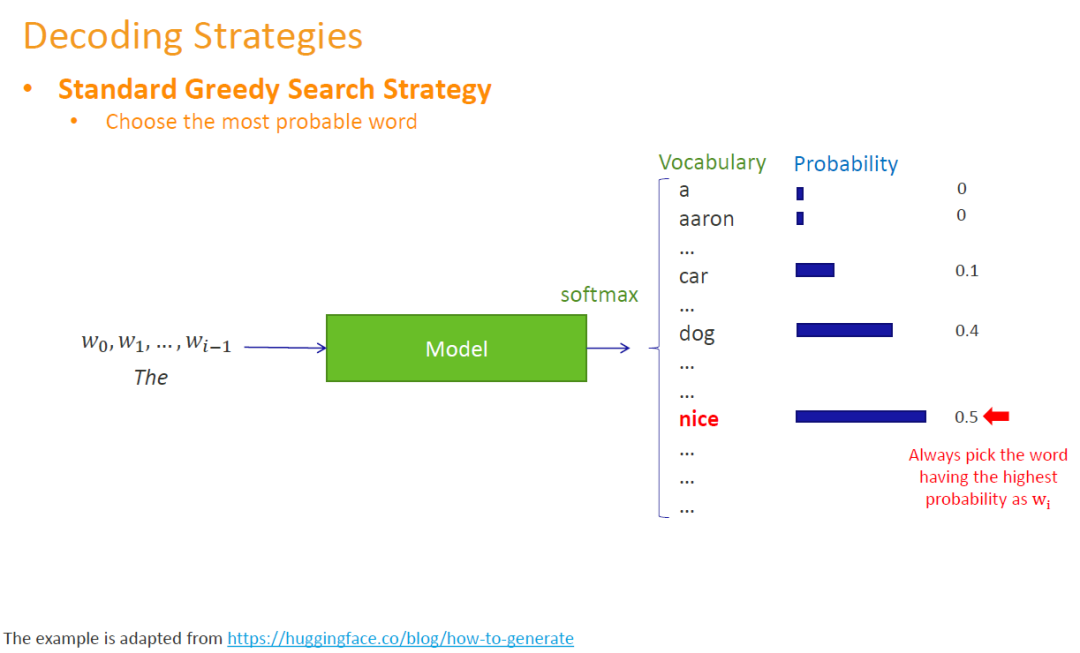

3.1 Standard Greedy Search

The simplest approach is to always pick the word with the highest probability. The potential problem is that once the whole sentence (many words) has been output, we cannot guarantee that it is the best possible sentence; a better one may exist. Even though we chose what looked best at every step, in the long run that does not mean the combination of those words forms the best sentence overall.
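A small sketch makes the problem concrete. The next-word table is hypothetical: only the first-step values (nice 0.5, dog 0.4) follow the beam-search figure in section 3.2, and the rest are assumed. A sentence's probability is taken to be the product of its word probabilities.

```python
# Toy next-word probabilities keyed by the words so far (assumed values,
# apart from the first-step 0.5 / 0.4 taken from the figure).
NEXT = {
    ("The",): {"nice": 0.5, "dog": 0.4, "car": 0.1},
    ("The", "nice"): {"woman": 0.4, "house": 0.3, "guy": 0.3},
    ("The", "dog"): {"has": 0.9, "runs": 0.05, "and": 0.05},
    ("The", "car"): {"is": 0.3, "drives": 0.5, "turns": 0.2},
}


def greedy_search(prefix, steps):
    seq, prob = list(prefix), 1.0
    for _ in range(steps):
        dist = NEXT[tuple(seq)]
        word = max(dist, key=dist.get)  # always take the most likely next word
        seq.append(word)
        prob *= dist[word]  # sentence probability = product of word probabilities
    return seq, prob


seq, prob = greedy_search(["The"], steps=2)
print(" ".join(seq), f"{prob:.2f}")  # The nice woman 0.20
# Yet "The dog has" has probability 0.4 * 0.9 = 0.36, so greedy missed it.
```

Choosing "nice" (0.5) over "dog" (0.4) looked best at step one, but it locked the search out of the higher-probability sentence.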


3.2 Beam Search

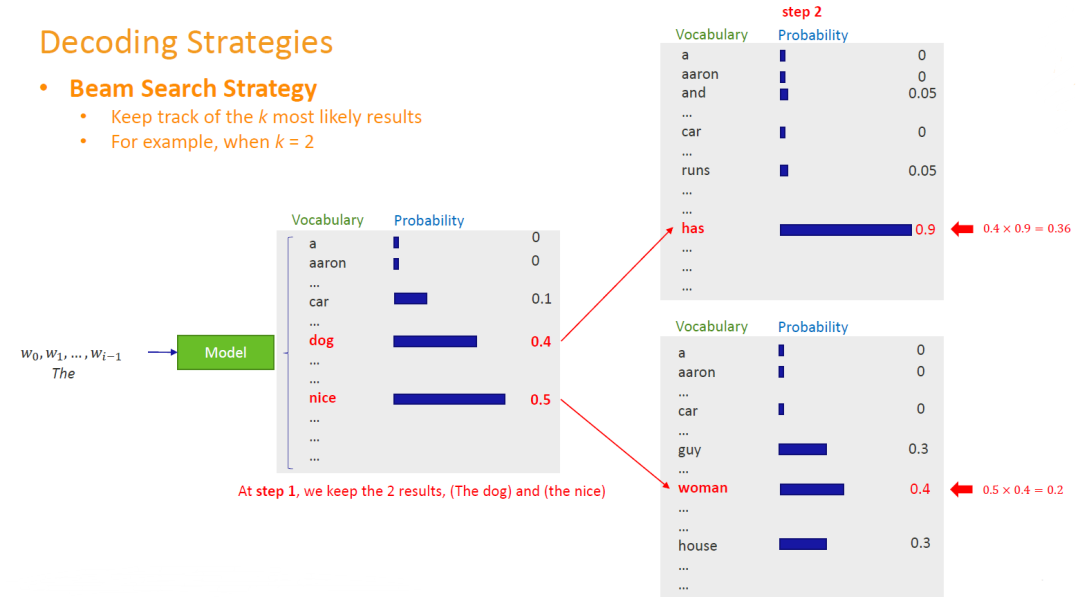

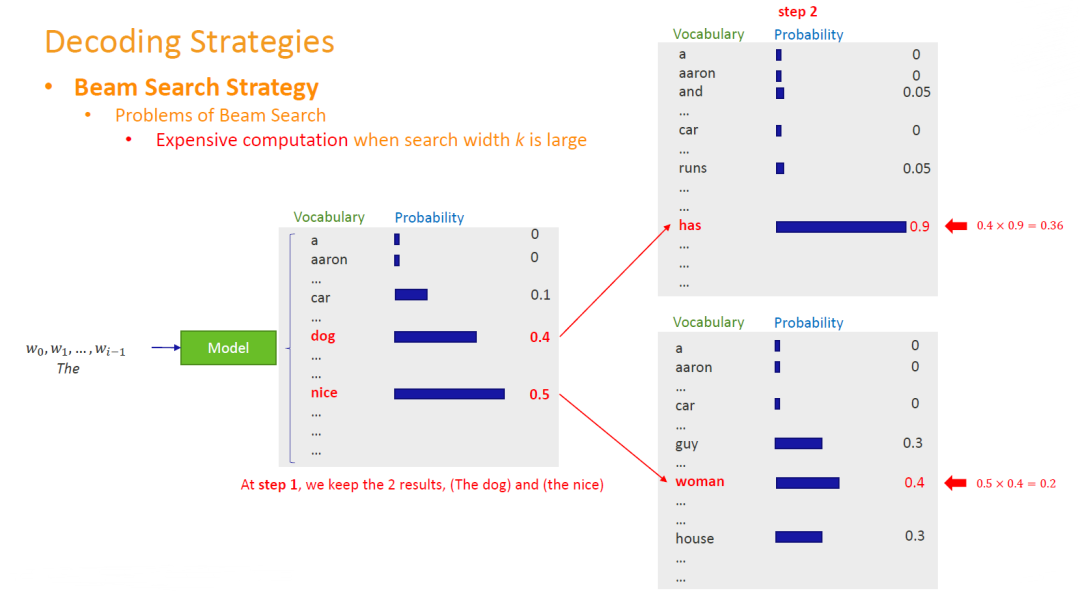

To address this "big picture" problem, we can try the beam search strategy. An example is shown in the figure. The strategy in section 3.1 is short-sighted, because it only ever tracks the single output it considers best. Beam search instead tracks several candidates (two in the figure), because the candidates that score best early on are not necessarily the best by the end. You can track even more candidates at the same time if you wish; the cost is that more computing resources are needed.


1) In the figure above, the current input is "The" → beam search chooses the next word based on the output probability table → it keeps the 2 best candidates, "The dog" and "The nice", with scores of 0.4 and 0.5 respectively.

2) Now the current inputs are "The dog" and "The nice" → the strategy expands both and keeps the 2 highest-scoring sequences overall (a sequence's score is the product of the word probabilities along it) → "The dog has" and "The nice woman".

3) Continue in the same way until the end, and you obtain the 2 highest-scoring sentences.
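The walkthrough above can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the next-word table is hypothetical apart from the first-step scores 0.5 and 0.4 quoted from the figure, and a sequence's score is taken to be the product of its word probabilities.

```python
# Toy next-word probabilities keyed by the words so far (assumed values,
# apart from the first-step 0.5 / 0.4 taken from the figure).
NEXT = {
    ("The",): {"nice": 0.5, "dog": 0.4, "car": 0.1},
    ("The", "nice"): {"woman": 0.4, "house": 0.3, "guy": 0.3},
    ("The", "dog"): {"has": 0.9, "runs": 0.05, "and": 0.05},
    ("The", "car"): {"is": 0.3, "drives": 0.5, "turns": 0.2},
}


def beam_search(prefix, steps, beam_width=2):
    beams = [(list(prefix), 1.0)]  # each entry: (words so far, sequence probability)
    for _ in range(steps):
        candidates = []
        for seq, prob in beams:
            for word, p in NEXT[tuple(seq)].items():
                candidates.append((seq + [word], prob * p))
        # Keep the beam_width best sequences over ALL expansions, not one per beam.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams


for seq, prob in beam_search(["The"], steps=2):
    print(" ".join(seq), f"{prob:.2f}")
# The dog has 0.36
# The nice woman 0.20
```

Real implementations usually add log-probabilities instead of multiplying raw probabilities, which avoids numerical underflow on long sequences. In Hugging Face transformers, the beam width corresponds to the `num_beams` argument of `generate`.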

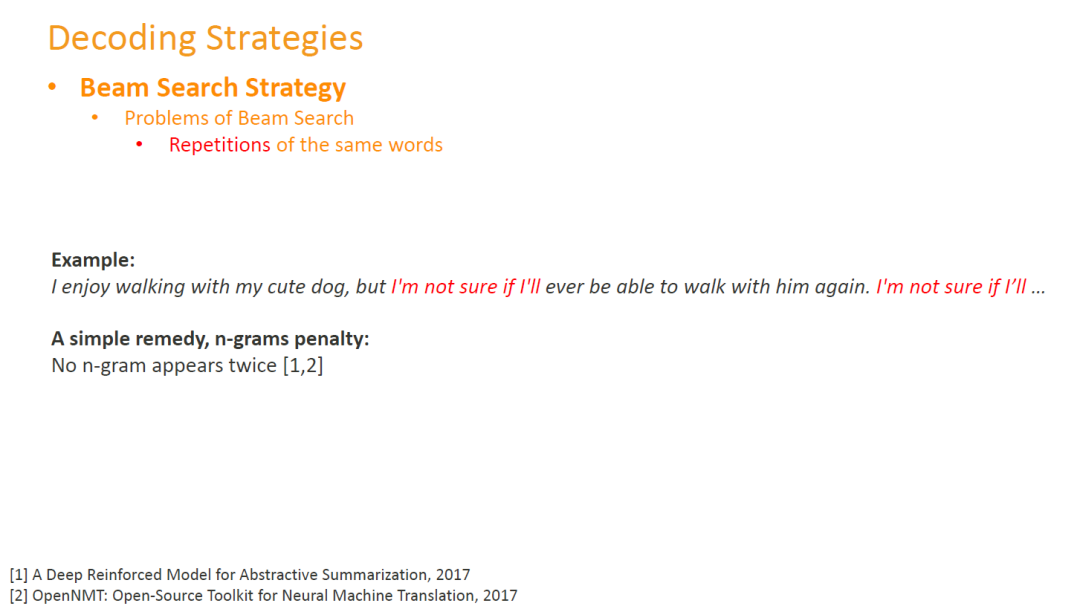

Shortcoming 1: Text generated by this strategy tends to repeat itself (as shown in the figure, "I'm not sure if I'll..." appears twice). One remedy is to constrain the output with simple rules, for example forbidding the same short stretch of text (n-gram) from appearing twice.
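Here is a minimal sketch of the "no n-gram may appear twice" rule; the helper names are hypothetical. Hugging Face transformers exposes a rule of this kind through the `no_repeat_ngram_size` argument of `generate`, but the code below is an independent illustration, not that library's implementation.

```python
def would_repeat_ngram(tokens, next_token, n):
    """True if appending next_token would create an n-gram already present in tokens."""
    if len(tokens) < n - 1:
        return False  # not enough context yet to form a complete n-gram
    new_ngram = tuple(tokens[len(tokens) - (n - 1):] + [next_token])
    seen = {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}
    return new_ngram in seen


def block_repeats(tokens, probs, n):
    """Drop candidate words that would repeat an n-gram (no renormalisation here)."""
    return {w: p for w, p in probs.items() if not would_repeat_ngram(tokens, w, n)}


tokens = "I'm not sure if I'll ever walk again . I'm not sure if".split()
print(block_repeats(tokens, {"I'll": 0.7, "we": 0.2, "it": 0.1}, n=3))
# {'we': 0.2, 'it': 0.1}
```

Because "sure if I'll" already occurred, "I'll" is removed from the candidates even though it was the most probable word.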

Shortcoming 2: When the beam width is large (that is, when we want to track many of the highest-scoring candidates at the same time), the demand for computing resources grows accordingly.

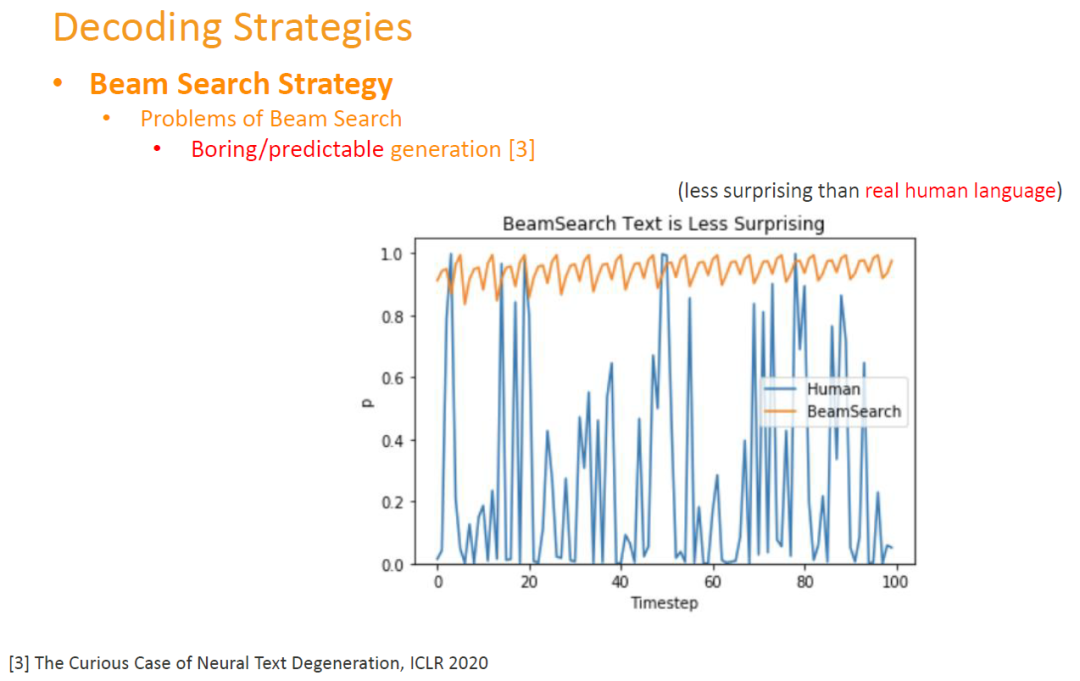

Shortcoming 3: The generated text tends to be dull and uninteresting. Research has shown that with beam search the model can generate sentences that humans understand, but those sentences do not surprise real human readers.

More variants of the beam search strategy:

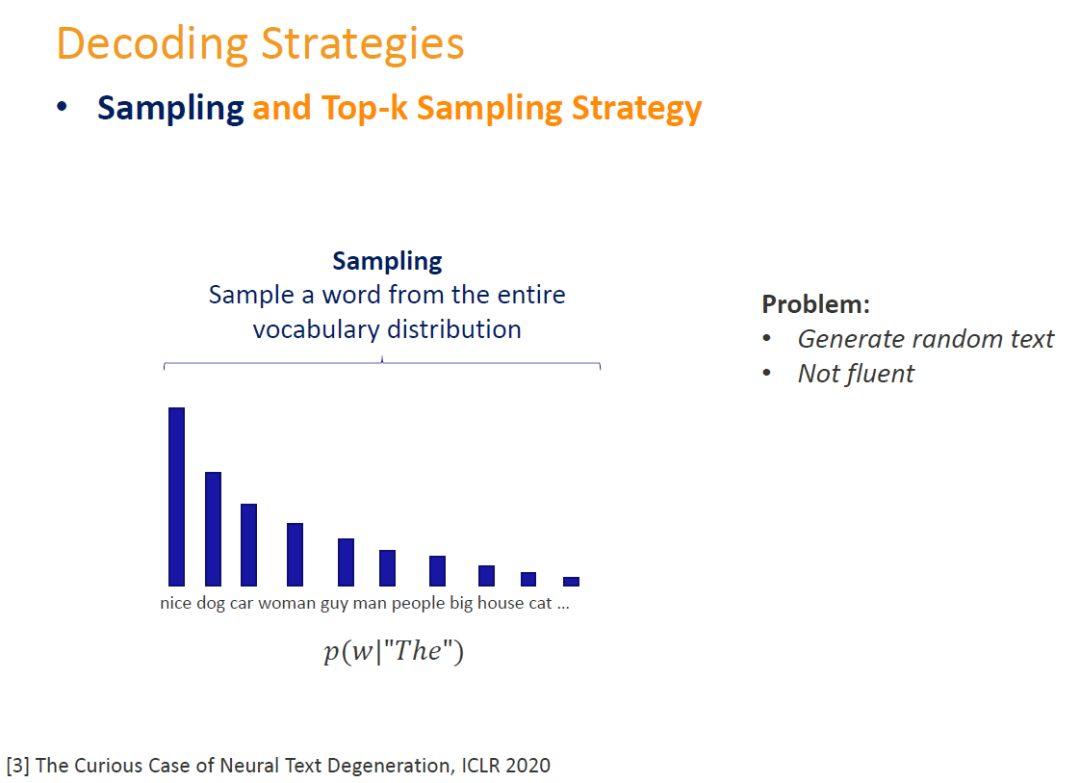

3.3 Sampling

Sampling makes the generated text more diverse. The most straightforward approach is to sample from the current probability distribution as-is: words the model considers reasonable (that is, high-probability words) are more likely to be drawn. The drawback is that there is some chance of generating nonsense, or sentences that are less fluent than human language.
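A minimal sketch of plain sampling from the probability table, using Python's `random.choices` with the probabilities as weights. The three-word table is hypothetical.

```python
import random


def sample(probs, rng=random):
    """Draw one word with probability proportional to its entry in the table."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


probs = {"good": 0.6, "fine": 0.3, "banana": 0.1}  # hypothetical probability table
rng = random.Random(0)  # fixed seed so the run is reproducible
counts = {w: 0 for w in probs}
for _ in range(10_000):
    counts[sample(probs, rng)] += 1
print(counts)  # roughly 6000 / 3000 / 1000; even "banana" gets picked sometimes
```

The occasional "banana" is exactly the incoherence risk described above.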

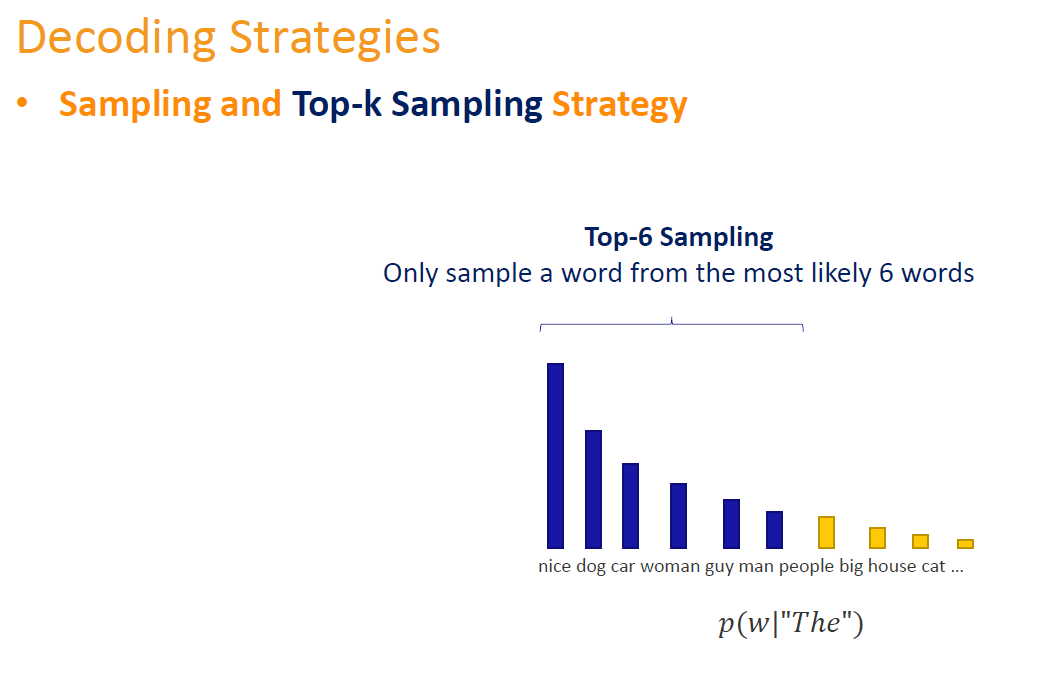

3.4 Top-k Sampling

To mitigate this problem, we can restrict the sampling range, for example by sampling only from the top k words in the probability table at each step.
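A minimal top-k sketch, assuming the kept words are renormalised to sum to 1 before sampling. The function names and the probability table are hypothetical.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable words and renormalise them to sum to 1."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {w: p / total for w, p in top}


def top_k_sample(probs, k, rng=random):
    filtered = top_k_filter(probs, k)
    words = list(filtered)
    return rng.choices(words, weights=[filtered[w] for w in words], k=1)[0]


probs = {"good": 0.5, "fine": 0.3, "tired": 0.15, "banana": 0.05}  # hypothetical
print(top_k_filter(probs, k=2))  # only "good" and "fine" remain (about 0.625 / 0.375)
rng = random.Random(0)
print([top_k_sample(probs, k=2, rng=rng) for _ in range(5)])
```

With k=1 this reduces to greedy search; with k equal to the vocabulary size it is plain sampling.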

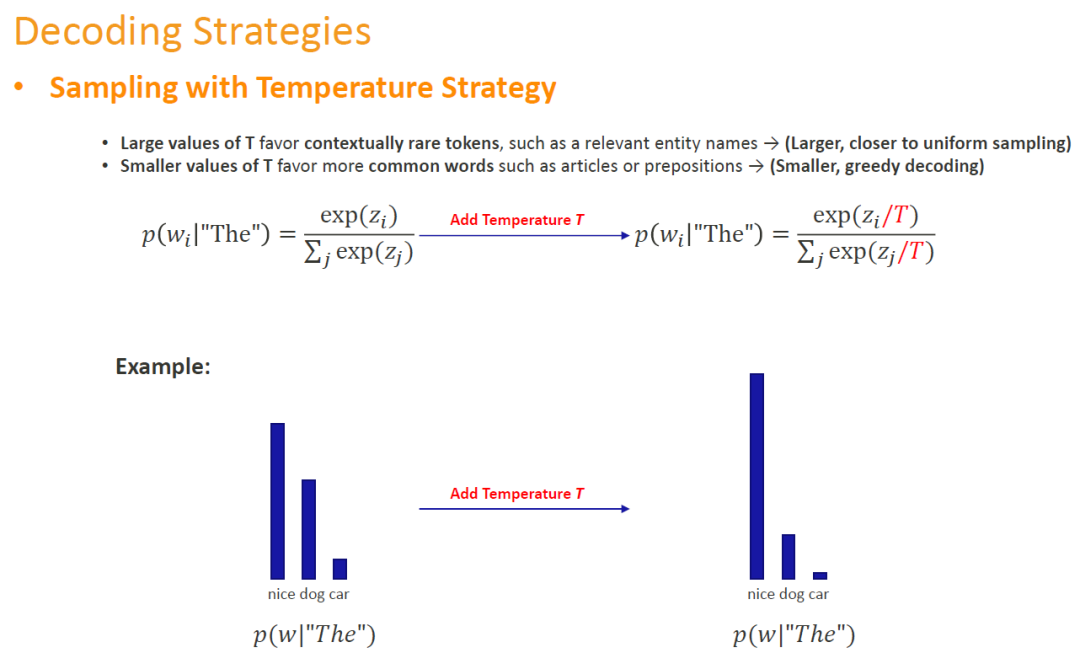

3.5 Sampling with Temperature

This method rescales the current probability distribution: for example, it can make large probabilities larger and small ones smaller, or make the gap between large and small probabilities less pronounced. The strength of this rescaling is controlled by the temperature $T > 0$. If $z_i$ is the model's score (logit) for word $i$, the rescaled probability is

$$P_i = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}$$

and $T = 1$ leaves the distribution unchanged.

- As $T$ increases, the model leans toward less common words when generating text. The larger $T$ is, the closer the rescaled distribution is to uniform sampling.

- As $T$ decreases, the model leans toward common words. The smaller $T$ is, the closer the rescaled distribution is to the greedy strategy mentioned at the beginning (always picking the highest-probability word).
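The formula can be checked numerically with a short sketch. The four logits are hypothetical; the function is a direct transcription of $P_i = \exp(z_i/T) / \sum_j \exp(z_j/T)$.

```python
import math


def softmax_with_temperature(logits, T):
    """P_i = exp(z_i / T) / sum_j exp(z_j / T); T must be positive."""
    if T <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for four words
for T in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(logits, T)
    print(f"T={T:>4}: {[round(p, 3) for p in probs]}")
# Small T piles almost all mass on the top word (close to greedy);
# large T flattens the distribution towards uniform.
```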

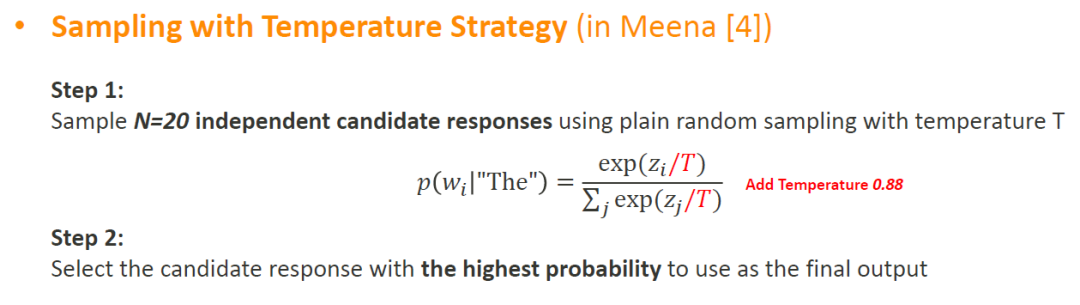

The Meena paper uses this strategy as follows:

- For a given input, the model generates 20 different candidate responses using this strategy.

- From these 20 candidates, the one whose whole sentence has the highest probability is selected as the final output.
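The two steps can be sketched with a toy model. Several assumptions apply: the bigram table is hypothetical, the temperature 0.8 is an arbitrary choice (only the count of 20 comes from the text), temperature is applied as $p^{1/T}$ (equivalent to $\exp(\log p / T)$ before renormalising), and candidates are ranked by their unscaled whole-sentence probability.

```python
import math
import random

# Hypothetical toy model: next-word probabilities given only the previous word.
NEXT = {
    "<s>": {"I": 0.8, "Fine": 0.2},
    "I": {"am": 0.7, "feel": 0.3},
    "am": {"good": 0.5, "great": 0.3, "tired": 0.2},
    "feel": {"great": 0.6, "tired": 0.4},
    "Fine": {"[EOS]": 1.0},
    "good": {"[EOS]": 1.0},
    "great": {"[EOS]": 1.0},
    "tired": {"[EOS]": 1.0},
}


def sample_response(rng, T):
    """Sample one full response with temperature T (p ** (1/T) == exp(log p / T))."""
    word, words = "<s>", []
    while True:  # every path in this toy table reaches [EOS]
        dist = NEXT[word]
        options = list(dist)
        weights = [dist[w] ** (1.0 / T) for w in options]  # choices() normalises these
        word = rng.choices(options, weights=weights, k=1)[0]
        if word == "[EOS]":
            return words
        words.append(word)


def log_prob(words):
    """Log-probability of the whole response (including [EOS]) under the unscaled model."""
    prev, total = "<s>", 0.0
    for w in words + ["[EOS]"]:
        total += math.log(NEXT[prev][w])
        prev = w
    return total


def sample_and_rank(n=20, T=0.8, seed=0):
    rng = random.Random(seed)
    candidates = [sample_response(rng, T) for _ in range(n)]
    return max(candidates, key=log_prob)  # keep the most probable whole response


print(" ".join(sample_and_rank()))
```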

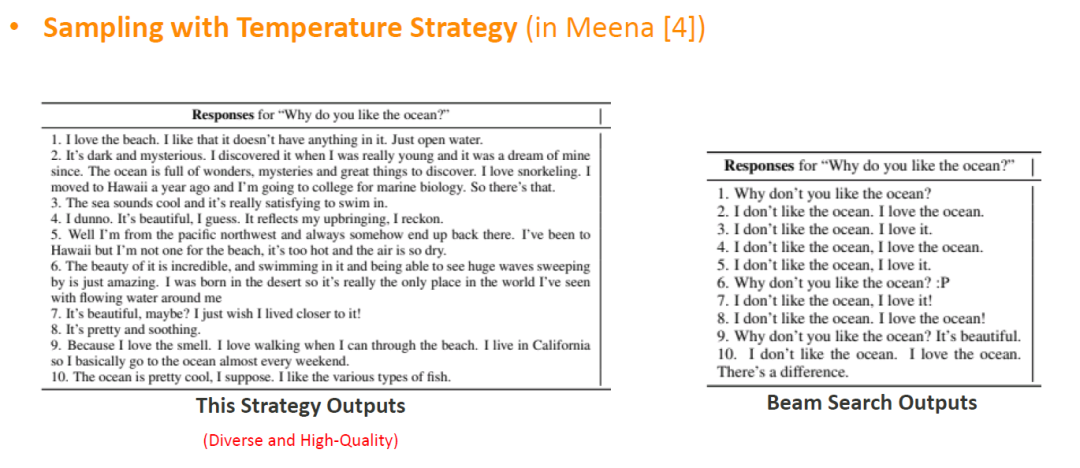

Responses generated this way are noticeably more diverse and of higher quality than those generated with beam search.


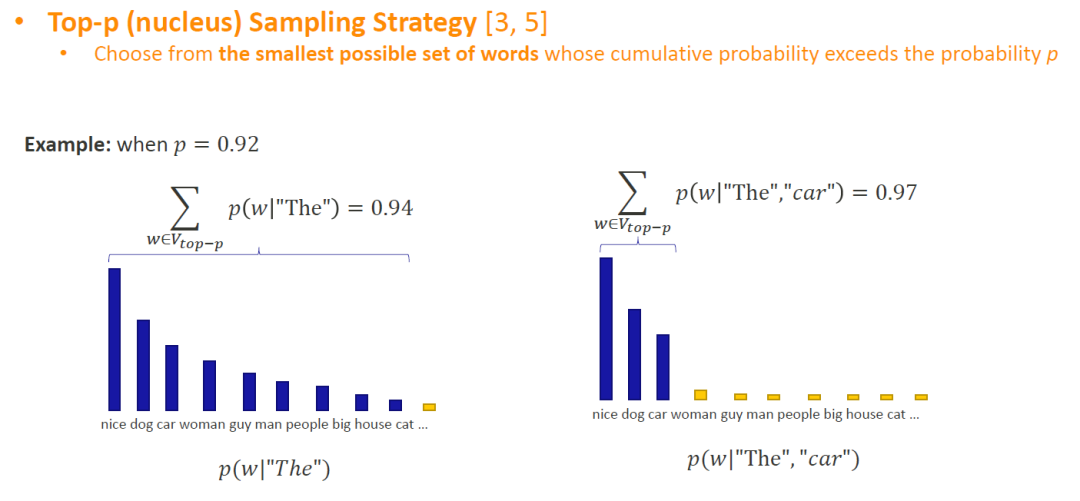

3.6 Top-p (Nucleus) Sampling

Top-k sampling restricts the sampling range very rigidly. For example, "top-5" means we can only sample from the 5 highest-ranked words. This has potential problems:

- Words ranked below 5th may still have fairly high probabilities; we permanently miss them, even though they may well be good choices.

- Words within the top 5 may still have low probabilities; we include them anyway, and they may degrade the quality of the text.

Top-p sampling lets the sampling range adjust itself dynamically by setting a threshold $p$: starting from the highest-probability word in the sorted vocabulary, we keep adding up probabilities until the cumulative total exceeds $p$. The words accumulated up to that point form the sampling pool.

Suppose we set the threshold to 0.92:

- In the left figure, the top 9 words are needed before the cumulative probability exceeds 0.92.

- In the right figure, the top 3 words alone already sum to more than 0.92.
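A minimal top-p sketch, assuming the kept words are renormalised before sampling and that the cutoff triggers once the cumulative sum reaches the threshold. The two distributions are hypothetical, built only to reproduce the two cases above (9 words vs 3 words at 0.92).

```python
import random


def top_p_filter(probs, p):
    """Keep the most probable words until their cumulative probability reaches p,
    then renormalise the kept words to sum to 1."""
    kept, cumulative = {}, 0.0
    for word, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[word] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {w: q / total for w, q in kept.items()}


def top_p_sample(probs, p, rng=random):
    filtered = top_p_filter(probs, p)
    words = list(filtered)
    return rng.choices(words, weights=[filtered[w] for w in words], k=1)[0]


# Hypothetical distributions: one flat, one peaked.
flat = {f"w{i}": q for i, q in enumerate(
    [0.2, 0.15, 0.12, 0.11, 0.1, 0.09, 0.08, 0.05, 0.04, 0.03, 0.03], start=1)}
peaked = {"a": 0.6, "b": 0.25, "c": 0.1, "d": 0.03, "e": 0.02}
print(len(top_p_filter(flat, 0.92)))    # 9 words needed
print(len(top_p_filter(peaked, 0.92)))  # 3 words suffice
```

The same threshold yields a wide pool when the model is unsure and a narrow one when it is confident, which is the advantage over a fixed k.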

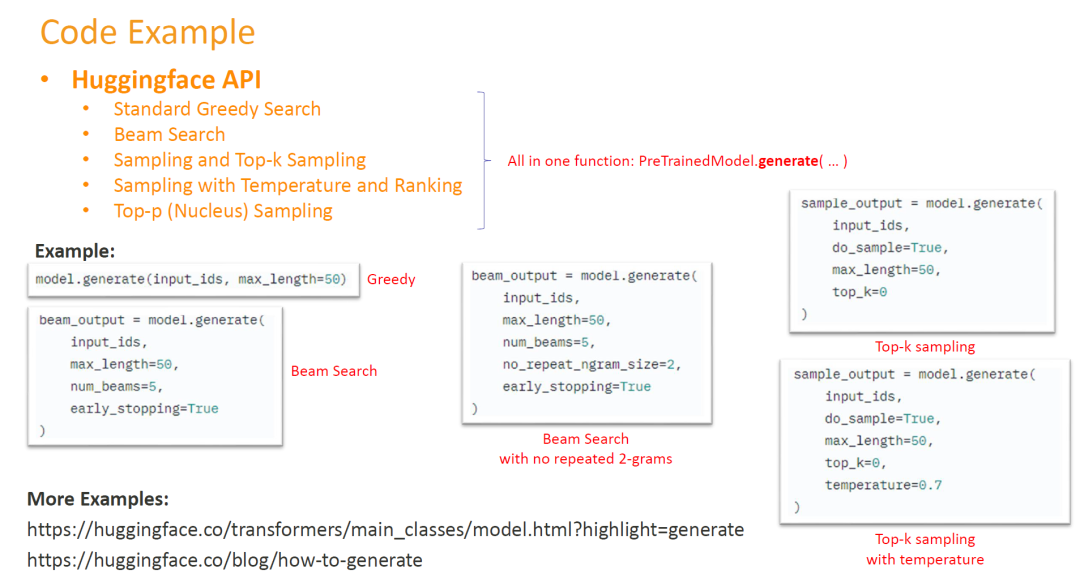

4. Code Tips

The figure shows some examples of the Hugging Face interface. As it illustrates, the methods described in this post are not completely isolated from one another: some can be combined, which is why several of these parameters can be set at the same time in the code.
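In Hugging Face transformers these strategies map to arguments of `generate`, for example `num_beams` for beam search, `no_repeat_ngram_size` for the n-gram rule, and `do_sample=True` together with `temperature`, `top_k` and `top_p` for sampling. To show how the sampling filters combine without depending on the library, here is a plain-Python sketch of one sampling step. The helper name `sample_next` is hypothetical, and applying temperature, then top-k, then top-p is an assumed order; this is not a copy of the library's code.

```python
import math
import random


def sample_next(logits, temperature=1.0, top_k=0, top_p=1.0, rng=random):
    """One sampling step combining temperature, top-k and top-p (hypothetical helper)."""
    # 1) Temperature: rescale the logits, then apply softmax.
    scaled = {w: z / temperature for w, z in logits.items()}
    m = max(scaled.values())
    exps = {w: math.exp(s - m) for w, s in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((w, e / total) for w, e in exps.items()),
                    key=lambda kv: kv[1], reverse=True)
    # 2) Top-k: keep only the k best words (top_k=0 disables this filter), renormalise.
    if top_k > 0:
        ranked = ranked[:top_k]
        z = sum(p for _, p in ranked)
        ranked = [(w, p / z) for w, p in ranked]
    # 3) Top-p: keep the shortest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for w, p in ranked:
        kept.append((w, p))
        cumulative += p
        if cumulative >= top_p:
            break
    # rng.choices accepts relative weights, so no final renormalisation is needed.
    return rng.choices([w for w, _ in kept], weights=[p for _, p in kept], k=1)[0]


logits = {"good": 2.0, "fine": 1.0, "tired": 0.5, "banana": -1.0}  # hypothetical
rng = random.Random(0)
print([sample_next(logits, temperature=0.7, top_k=3, top_p=0.9, rng=rng) for _ in range(5)])
```

With the defaults (`temperature=1.0`, `top_k=0`, `top_p=1.0`) this is plain sampling; `top_k=1` turns it into greedy selection.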

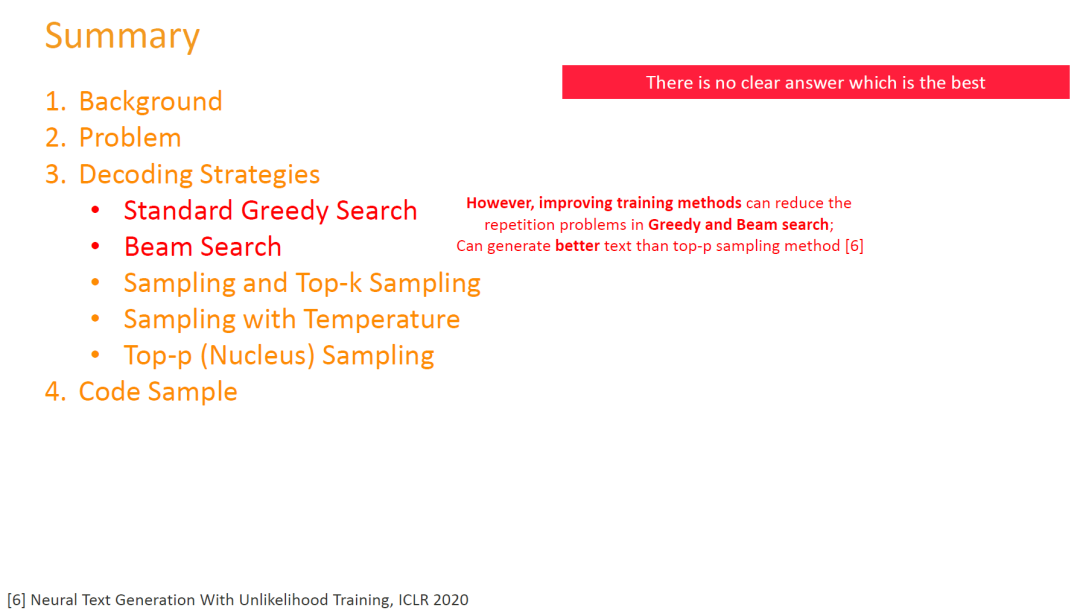

5. Summary

This post introduced several commonly used decoding strategies, but it is genuinely hard to say which one is best.

In general, sampling-based methods outperform greedy and beam search in open-domain dialogue systems, because they generate higher-quality, more diverse text.

That does not mean greedy and beam search should be abandoned entirely: some research has shown that, with good training, these two methods can generate better text than top-p sampling.

There is still a long, long way to go in natural language processing. Keep it up!


Reviewing editor: Li Qian (李倩)


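Original title: 通俗理解文本生成的常用解碼策略 (Understanding Common Decoding Strategies for Text Generation)

Source: WeChat ID zenRRan, WeChat Official Account 深度學習自然語言處理 (Deep Learning and Natural Language Processing). Please credit the source when reposting.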

發(fā)布評(píng)論請(qǐng)先 登錄

高級(jí)檢索增強(qiáng)生成技術(shù)(RAG)全面指南

如何構(gòu)建文本生成器?如何實(shí)現(xiàn)馬爾可夫鏈以實(shí)現(xiàn)更快的預(yù)測(cè)模型

循環(huán)神經(jīng)網(wǎng)絡(luò)卷積神經(jīng)網(wǎng)絡(luò)注意力文本生成變換器編碼器序列表征

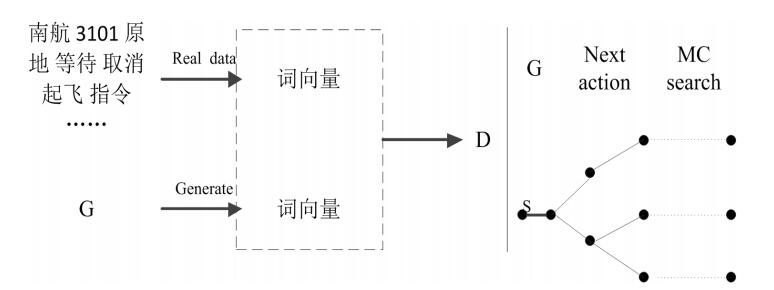

基于生成對(duì)抗網(wǎng)絡(luò)GAN模型的陸空通話文本生成系統(tǒng)設(shè)計(jì)

基于生成器的圖像分類對(duì)抗樣本生成模型

基于生成式對(duì)抗網(wǎng)絡(luò)的深度文本生成模型

文本生成任務(wù)中引入編輯方法的文本生成

受控文本生成模型的一般架構(gòu)及故事生成任務(wù)等方面的具體應(yīng)用

基于GPT-2進(jìn)行文本生成

基于VQVAE的長(zhǎng)文本生成 利用離散code來(lái)建模文本篇章結(jié)構(gòu)的方法

ETH提出RecurrentGPT實(shí)現(xiàn)交互式超長(zhǎng)文本生成

面向結(jié)構(gòu)化數(shù)據(jù)的文本生成技術(shù)研究

通俗理解文本生成的常用解碼策略

通俗理解文本生成的常用解碼策略

評(píng)論