Unstructured Data Processing with the Raspberry Pi AI Kit: Hailo Edge AI

Unstructured data processing, Raspberry Pi 5, Raspberry Pi AI Kit, Milvus, Zilliz, data, images, computer vision, deep learning, Python

Detect, display, and store detected images from a live camera stream at the edge

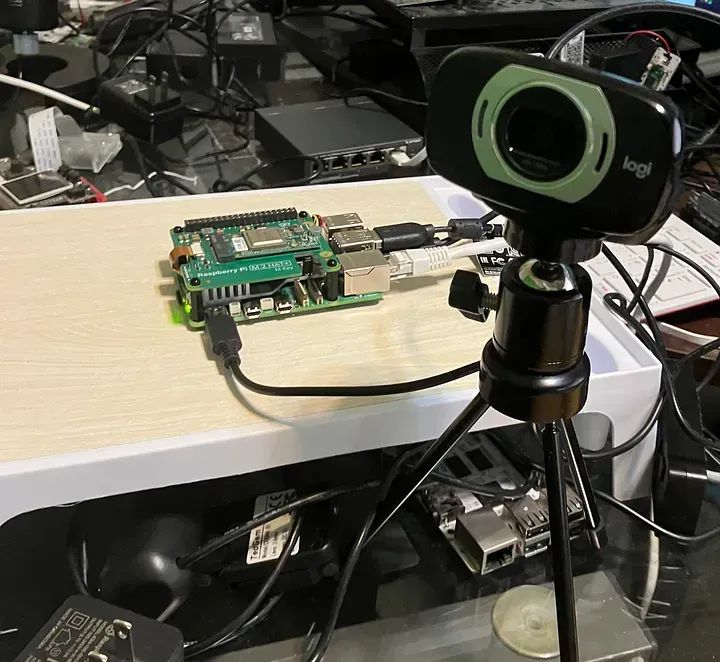

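Even on a limited budget, you can start building edge AI use cases with powerful devices such as the Raspberry Pi 5 with 8GB of RAM and the NVIDIA Jetson Orin Nano. The Raspberry Pi AI Kit for the RPI5 platform was released recently, so I had to get one and try it out.

The AI Kit adds a neural network inference accelerator capable of 13 tera-operations per second (TOPS), which is quite good for $70. Attached to the M.2 Hat is the Hailo-8L M.2 entry-level acceleration module, which provides our AI capabilities.

For the first demo, I modified one of the provided RPI5 Hailo AI Python examples to run real-time image detection on a webcam, send the detections to a Slack channel, and, more importantly, vectorize the detections and store them in Milvus along with their metadata.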

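Running Live on the Raspberry Pi 5

We used the RPI5 object detection example program provided by Hailo and enhanced it to send results to Slack, MinIO, and Milvus.

We start from the example object detection program, but first I added imports for the Slack, Milvus, S3, TIMM, Sci-Kit Learn, Pytorch, and UUID libraries. I also set up some constants to use later. We then connect to our Milvus server and Slack channel and start the GStreamer loop. I added a time check: if something is detected, I save the camera frame to a file, upload it to S3, and send it to my Slack channel. Finally, I insert the vectorized image together with its important metadata: the S3 path, filename, label, and confidence. Each entry in our collection gets an auto-generated ID.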
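The time check and the metadata record described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from the demo: `DetectionThrottle`, `build_record`, the 30-second interval, the `vector` field name, and the filename pattern are all hypothetical. Only the metadata fields (S3 path, filename, label, confidence) come from the description.

```python
import time
import uuid


class DetectionThrottle:
    """Allow at most one saved detection per `interval` seconds (hypothetical helper)."""

    def __init__(self, interval=30.0, clock=time.monotonic):
        self.interval = interval
        self.clock = clock
        self.last_sent = None  # time of the last accepted detection

    def ready(self):
        """Return True and restart the timer if enough time has passed."""
        now = self.clock()
        if self.last_sent is None or now - self.last_sent >= self.interval:
            self.last_sent = now
            return True
        return False


def build_record(vector, label, confidence, bucket="images"):
    """Build one row to insert: the embedding plus its metadata.

    The "vector" field name and the filename pattern are assumptions.
    The ID is left out because the collection generates it automatically.
    """
    filename = f"{label}-{uuid.uuid4()}.jpg"
    return {
        "vector": list(vector),
        "label": label,
        "confidence": float(confidence),
        "filename": filename,
        "s3path": f"s3://{bucket}/{filename}",
    }
```

In a detection callback, one would call `throttle.ready()` first and only save the frame, upload it, post to Slack, and insert the record when it returns `True`.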

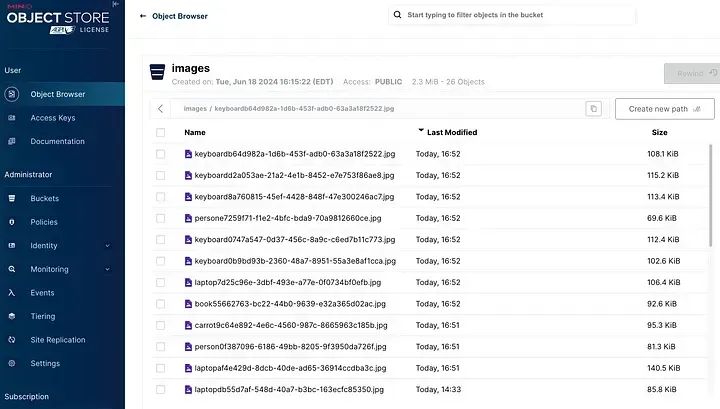

Our images have been uploaded to MinIO:

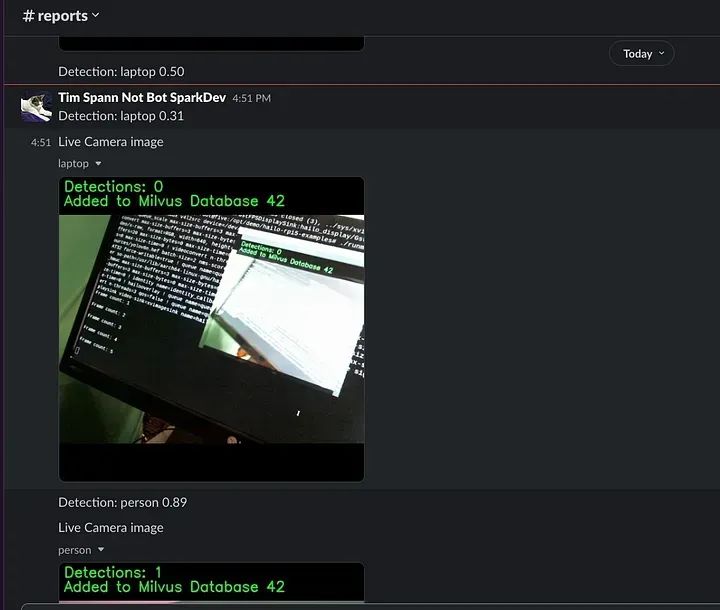

They have also been sent to our #reports Slack channel along with our text messages.

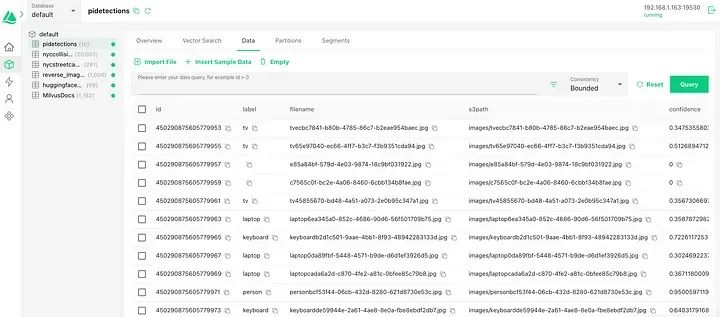

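Most importantly, our metadata and vectors have been uploaded and are already available for super-fast search.

Now we can start querying our vectors, and I will show you how in a Jupyter notebook.

Querying the Database and Displaying Images

In [1]: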

!pip install boto3

Requirement already satisfied: boto3 in ./milvusvenv/lib/python3.12/site-packages (1.34.129)

Requirement already satisfied: botocore<1.35.0,>=1.34.129 in ./milvusvenv/lib/python3.12/site-packages (from boto3) (1.34.129)

Requirement already satisfied: jmespath<2.0.0,>=0.7.1 in ./milvusvenv/lib/python3.12/site-packages (from boto3) (1.0.1)

Requirement already satisfied: s3transfer<0.11.0,>=0.10.0 in ./milvusvenv/lib/python3.12/site-packages (from boto3) (0.10.1)

Requirement already satisfied: python-dateutil<3.0.0,>=2.1 in ./milvusvenv/lib/python3.12/site-packages (from botocore<1.35.0,>=1.34.129->boto3) (2.9.0.post0)

Requirement already satisfied: urllib3!=2.2.0,<3,>=1.25.4 in ./milvusvenv/lib/python3.12/site-packages (from botocore<1.35.0,>=1.34.129->boto3) (2.2.1)

Requirement already satisfied: six>=1.5 in ./milvusvenv/lib/python3.12/site-packages (from python-dateutil<3.0.0,>=2.1->botocore<1.35.0,>=1.34.129->boto3) (1.16.0)

In [5]:

    from __future__ import print_function
    import requests
    import sys
    import io
    import json
    import shutil
    import datetime
    import subprocess
    import os
    import math
    import base64
    from time import gmtime, strftime
    import random, string
    import time
    import psutil
    import uuid
    import socket
    from pymilvus import connections
    from pymilvus import utility
    from pymilvus import FieldSchema, CollectionSchema, DataType, Collection
    import torch
    from torchvision import transforms
    from PIL import Image
    import timm
    from sklearn.preprocessing import normalize
    from timm.data import resolve_data_config
    from timm.data.transforms_factory import create_transform
    from pymilvus import MilvusClient
    from IPython.display import display

In [8]:

    # -----------------------------------------------------------------------------

    class FeatureExtractor:
        def __init__(self, modelname):
            # Load the pre-trained model
            self.model = timm.create_model(
                modelname, pretrained=True, num_classes=0, global_pool="avg"
            )
            self.model.eval()

            # Get the input size required by the model
            self.input_size = self.model.default_cfg["input_size"]

            config = resolve_data_config({}, model=modelname)
            # Get the preprocessing function provided by TIMM for the model
            self.preprocess = create_transform(**config)

        def __call__(self, imagepath):
            # Preprocess the input image
            input_image = Image.open(imagepath).convert("RGB")  # Convert to RGB if needed
            input_image = self.preprocess(input_image)

            # Convert the image to a PyTorch tensor and add a batch dimension
            input_tensor = input_image.unsqueeze(0)

            # Perform inference
            with torch.no_grad():
                output = self.model(input_tensor)

            # Extract the feature vector
            feature_vector = output.squeeze().numpy()

            return normalize(feature_vector.reshape(1, -1), norm="l2").flatten()
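The final `normalize(..., norm="l2")` call scales each feature vector to unit length, which makes the cosine similarity used later in the search equal to a plain dot product. The sketch below reproduces that step in plain Python on toy 2-dimensional vectors (the real feature vectors are much longer). `l2_normalize` and `cosine` are illustrative helper names, not part of scikit-learn or Milvus.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return list(vec) if norm == 0 else [x / norm for x in vec]


def cosine(a, b):
    """Cosine similarity computed from the raw, unnormalized vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


a, b = [3.0, 4.0], [4.0, 3.0]
ua, ub = l2_normalize(a), l2_normalize(b)

# After normalization the dot product alone gives the cosine similarity.
dot_of_units = sum(x * y for x, y in zip(ua, ub))
print(round(dot_of_units, 4), round(cosine(a, b), 4))  # prints: 0.96 0.96
```

Normalizing once at insert time and once at query time keeps the stored vectors and the query vector on the same scale.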

In [9]:

    extractor = FeatureExtractor("resnet34")

    # -----------------------------------------------------------------------------
    # Constants - should be environment variables
    # -----------------------------------------------------------------------------
    DIMENSION = 512
    MILVUS_URL = "http://192.168.1.163:19530"
    COLLECTION_NAME = "pidetections"
    BUCKET_NAME = "images"
    DOWNLOAD_DIR = "/Users/timothyspann/Downloads/code/images/"
    AWS_RESOURCE = "s3"
    S3_ENDPOINT_URL = "http://192.168.1.163:9000"
    AWS_ACCESS_KEY = "minioadmin"
    AWS_SECRET_ACCESS_KEY = "minioadmin"
    S3_SIGNATURE_VERSION = "s3v4"
    AWS_REGION_NAME = "us-east-1"
    S3_ERROR_MESSAGE = "Download failed"
    # -----------------------------------------------------------------------------
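The comment in this cell notes that the constants should really be environment variables. One possible way to do that is sketched below. The `load_settings` helper and the environment variable names are assumptions that simply mirror the constant names, and the hard-coded demo values are kept only as fallbacks.

```python
import os


def load_settings(env=os.environ):
    """Read connection settings from the environment, falling back to the demo defaults."""
    return {
        "DIMENSION": int(env.get("DIMENSION", "512")),
        "MILVUS_URL": env.get("MILVUS_URL", "http://192.168.1.163:19530"),
        "COLLECTION_NAME": env.get("COLLECTION_NAME", "pidetections"),
        "BUCKET_NAME": env.get("BUCKET_NAME", "images"),
        "S3_ENDPOINT_URL": env.get("S3_ENDPOINT_URL", "http://192.168.1.163:9000"),
        # The MinIO default credentials are demo-only; supply real ones via the environment.
        "AWS_ACCESS_KEY": env.get("AWS_ACCESS_KEY", "minioadmin"),
        "AWS_SECRET_ACCESS_KEY": env.get("AWS_SECRET_ACCESS_KEY", "minioadmin"),
    }
```

Calling `load_settings()` with no arguments reads the process environment; passing a plain dict instead makes the function easy to try out in a notebook.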

In [10]:

    # -----------------------------------------------------------------------------
    # Connect to Milvus

    # Local Docker Server
    milvus_client = MilvusClient(uri=MILVUS_URL)
    # -----------------------------------------------------------------------------

In [12]:

    import os
    import boto3
    import botocore.exceptions  # needed for botocore.exceptions.ClientError below
    from botocore.client import Config

    # -----------------------------------------------------------------------------
    # Access Images on S3 Compatible Store - AWS S3 or Minio or ...
    # -----------------------------------------------------------------------------
    s3 = boto3.resource(
        AWS_RESOURCE,
        endpoint_url=S3_ENDPOINT_URL,
        aws_access_key_id=AWS_ACCESS_KEY,
        aws_secret_access_key=AWS_SECRET_ACCESS_KEY,
        config=Config(signature_version=S3_SIGNATURE_VERSION),
        region_name=AWS_REGION_NAME,
    )

    bucket = s3.Bucket(BUCKET_NAME)

    # -----------------------------------------------------------------------------
    # Get last modified image
    # -----------------------------------------------------------------------------
    files = bucket.objects.filter()
    files = [obj.key for obj in sorted(files, key=lambda x: x.last_modified, reverse=True)]

    # Take the first key, which is the most recently modified object
    for imagename in files:
        query_image = imagename
        break

    search_image_name = DOWNLOAD_DIR + query_image

    try:
        s3.Bucket(BUCKET_NAME).download_file(query_image, search_image_name)
    except botocore.exceptions.ClientError as e:
        print(S3_ERROR_MESSAGE)

    # -----------------------------------------------------------------------------
    # Search Milvus for that vector and filter by a label
    # -----------------------------------------------------------------------------
    results = milvus_client.search(
        COLLECTION_NAME,
        data=[extractor(search_image_name)],
        filter='label in ["keyboard"]',
        output_fields=["label", "confidence", "id", "s3path", "filename"],
        search_params={"metric_type": "COSINE"},
        limit=5,
    )

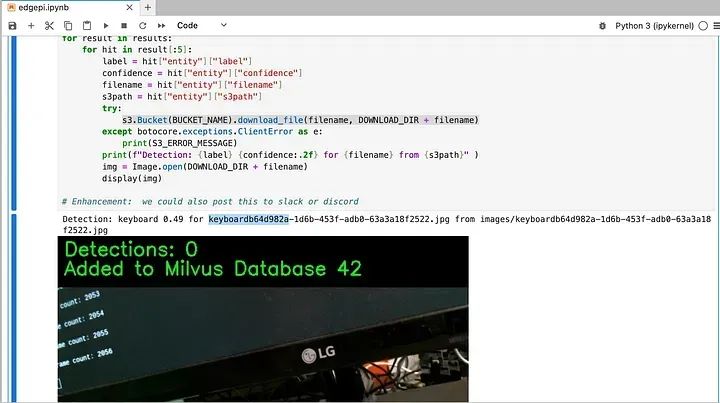

    # -----------------------------------------------------------------------------
    # Iterate through last five results and display metadata and image
    # -----------------------------------------------------------------------------
    for result in results:
        for hit in result[:5]:
            label = hit["entity"]["label"]
            confidence = hit["entity"]["confidence"]
            filename = hit["entity"]["filename"]
            s3path = hit["entity"]["s3path"]
            try:
                s3.Bucket(BUCKET_NAME).download_file(filename, DOWNLOAD_DIR + filename)
            except botocore.exceptions.ClientError as e:
                print(S3_ERROR_MESSAGE)
            print(f"Detection: {label} {confidence:.2f} for {filename} from {s3path}")
            img = Image.open(DOWNLOAD_DIR + filename)
            display(img)
            # Enhancement: we could also post this to slack or discord
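To experiment with the display loop without a running Milvus or MinIO server, the same iteration can be exercised on a hand-written result. In this sketch the `sample_results` values are made up for illustration and `summarize_hits` is a hypothetical helper. Only the nested layout (one list of hits per query vector, each hit carrying an `entity` dict with the requested output fields) follows what the loop above reads.

```python
def summarize_hits(results, limit=5):
    """Return one summary line per hit, mirroring the print statement in the loop above."""
    lines = []
    for result in results:          # one entry per query vector
        for hit in result[:limit]:  # hits for that query, in the order returned
            entity = hit["entity"]
            lines.append(
                f"Detection: {entity['label']} {entity['confidence']:.2f} "
                f"for {entity['filename']} from {entity['s3path']}"
            )
    return lines


# Made-up data with the same shape as the search result used above.
sample_results = [[
    {
        "id": 1,
        "distance": 0.98,
        "entity": {
            "label": "keyboard",
            "confidence": 0.8731,
            "filename": "a.jpg",
            "s3path": "s3://images/a.jpg",
        },
    },
]]

for line in summarize_hits(sample_results):
    print(line)
```

Returning strings instead of printing directly also makes it simple to post the same summaries to Slack or Discord, as the enhancement comment suggests.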

See the full code on GitHub: https://gist.github.com/tspannhw/8e2ec1293c1cff1edaefbf7fde54f47a#file-edgeaifind-ipynb

I recorded this demo running, so you can see it in action in real time.

If you bought one and want to set it up to replicate my demo, see the steps at the end of this article.

Demo Packing List

MinIO/S3, Milvus, Slack, Python, Boto3, OpenCV2, Pytorch, Sci-Kit Learn, TIMM, Hailo, YOLOv6n, object detection, Raspberry Pi AI Kit, Raspberry Pi 5 with 8GB RAM, logi webcam, resnet34, Torchvision, PyMilvus, Hailo8L M.2 module, M.2 M-Key Hat, heat sink.

Getting Started

After adding the hardware (see the video and links below), install the libraries, reboot, and you should be ready to go.

    tspann@five:/opt/demo $ hailortcli fw-control identify

    Executing on device: 000000.0
    Identifying board
    Control Protocol Version: 2
    Firmware Version: 4.17.0 (release,app,extended context switch buffer)
    Logger Version: 0
    Board Name: Hailo-8
    Device Architecture: HAILO8L
    Serial Number: HLDDLBB241601635
    Part Number: HM21LB1C2LAE
    Product Name: HAILO-8L AI ACC M.2 B+M KEY MODULE EXT TMP

    tspann@five:/opt/demo $ dmesg | grep -i hailo

    [ 3.155152] hailo: Init module. driver version 4.17.0
    [ 3.155295] hailo 0000:01:00.0: Probing on: 1e60:2864...
    [ 3.155301] hailo 0000:01:00.0: Probing: Allocate memory for device extension, 11600
    [ 3.155321] hailo 0000:01:00.0: enabling device (0000 -> 0002)
    [ 3.155327] hailo 0000:01:00.0: Probing: Device enabled
    [ 3.155350] hailo 0000:01:00.0: Probing: mapped bar 0 - 0000000095e362ea 16384
    [ 3.155357] hailo 0000:01:00.0: Probing: mapped bar 2 - 000000005e2b2b7e 4096
    [ 3.155362] hailo 0000:01:00.0: Probing: mapped bar 4 - 000000008db50d03 16384
    [ 3.155365] hailo 0000:01:00.0: Probing: Force setting max_desc_page_size to 4096 (recommended value is 16384)
    [ 3.155375] hailo 0000:01:00.0: Probing: Enabled 64 bit dma
    [ 3.155378] hailo 0000:01:00.0: Probing: Using userspace allocated vdma buffers
    [ 3.155382] hailo 0000:01:00.0: Disabling ASPM L0s
    [ 3.155385] hailo 0000:01:00.0: Successfully disabled ASPM L0s
    [ 3.417111] hailo 0000:01:00.0: Firmware was loaded successfully
    [ 3.427885] hailo 0000:01:00.0: Probing: Added board 1e60-2864, /dev/hailo0
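A setup script could check the identify output automatically instead of reading it by eye. The following sketch parses the `Key: Value` lines printed by `hailortcli fw-control identify` into a dictionary. `parse_identify` is a hypothetical helper, and the sample text is copied from the output above rather than captured live.

```python
def parse_identify(output):
    """Turn 'Key: Value' lines into a dict; lines without a colon are skipped."""
    info = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


# Sample text taken from the identify output shown above.
sample = """Identifying board
Control Protocol Version: 2
Firmware Version: 4.17.0 (release,app,extended context switch buffer)
Board Name: Hailo-8
Device Architecture: HAILO8L
"""

info = parse_identify(sample)
print(info["Device Architecture"], info["Firmware Version"].split()[0])
```

On the Pi itself, the text could instead come from `subprocess.run(["hailortcli", "fw-control", "identify"], capture_output=True, text=True).stdout`.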

Additional Commands

    gst-inspect-1.0 hailotools
    lspci | grep Hailo
    uname -a
    v4l2-ctl --list-formats-ext -d /dev/video0
    ls /dev/video*
    ffplay -f v4l2 /dev/video0

-

AI

+關(guān)注

關(guān)注

88文章

35016瀏覽量

278821 -

樹莓派

+關(guān)注

關(guān)注

121文章

1995瀏覽量

107368 -

邊緣AI

+關(guān)注

關(guān)注

0文章

155瀏覽量

5437

發(fā)布評論請先 登錄

如何將樹莓派變成一個(gè)FM的音頻發(fā)射器

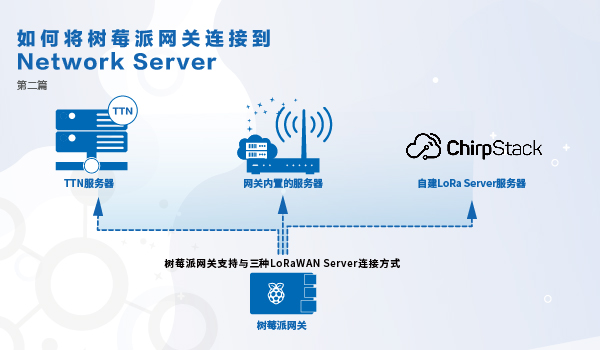

手把手教你如何將樹莓派網(wǎng)關(guān)鏈接到服務(wù)器之第二篇

如何將樹莓派網(wǎng)關(guān)與外網(wǎng)連接——手把手教你如何將樹莓派網(wǎng)關(guān)連接到服務(wù)器之第三篇

如何將一個(gè)樹莓派官方原始系統(tǒng)鏡像移植到paipai one設(shè)備

樹莓派基本設(shè)置流程(下)

手把手教你如何將樹莓派網(wǎng)關(guān)鏈接到服務(wù)器之第二篇

如何將樹莓派網(wǎng)關(guān)與外網(wǎng)連接——手把手教你如何將樹莓派網(wǎng)關(guān)連接到服務(wù)器之第三篇

如何將樹莓派網(wǎng)關(guān)連接到TTN——手把手教你如何將樹莓派網(wǎng)關(guān)連接到服務(wù)器之第四篇

如何將樹莓派網(wǎng)關(guān)連接到內(nèi)置LoRaWAN? Network Server ——手把手教你如何將樹莓派網(wǎng)關(guān)連接到服務(wù)器之第五篇

如何將WizFi360 EVB Mini添加到樹莓派Pico Python

樹莓派“吉尼斯世界記錄”:將樹莓派的性能發(fā)揮到極致的項(xiàng)目!

樹莓派分類器:用樹莓派識別不同型號的樹莓派!

樹莓派AI套件:如何將混亂的數(shù)據(jù)變成有序的魔法

樹莓派AI套件:如何將混亂的數(shù)據(jù)變成有序的魔法

評論